Alibaba Qwen Team Releases Qwen3.5-397B MoE Model with 17B Active Parameters and 1M Token Context for AI Agents

Alibaba Cloud has added another major release to the open-source ecosystem. Today, the Qwen team released Qwen3.5, the newest generation of its large language model (LLM) family. The most powerful version is Qwen3.5-397B-A17B. This model is a sparse Mixture-of-Experts (MoE) system. It combines strong reasoning power with high efficiency.

Qwen3.5 is a native vision-language model. It is specifically designed for AI agents. It can see, code, and reason across 201 languages.

Core Architecture: 397B Total, 17B Active

The technical specifications of Qwen3.5-397B-A17B are impressive. The model contains 397B total parameters. However, it uses a sparse MoE design. This means it activates only 17B parameters during any single forward pass.

This 17B activation count is the most important number for devs. It lets the model offer the intelligence of a 400B-class model while running at the speed of a much smaller one. The Qwen team reports an 8.6x to 19.0x decoding speedup compared to previous generations. This efficiency addresses the high cost of running large-scale AI.
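To see why the 17B figure matters, here is a back-of-envelope sketch. It uses the common rule of thumb that a forward pass costs about 2 FLOPs per parameter used, per token, and compares a hypothetical dense 397B model with an MoE that touches 17B parameters per token. This is an approximation: it ignores attention-score compute, routing overhead, and memory bandwidth. The resulting ratio is also a different comparison from the Qwen team's reported decoding speedup against previous generations.

```python
# Rough per-token compute for a sparse MoE vs. a hypothetical dense model of the same size.
# Rule of thumb (approximation): forward-pass FLOPs per token ≈ 2 × parameters used.
# Ignores attention-score FLOPs, routing overhead and memory bandwidth.

TOTAL_PARAMS = 397e9   # all experts plus shared weights
ACTIVE_PARAMS = 17e9   # parameters actually used for one token

def flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs for one token (2 FLOPs per multiply-add)."""
    return 2.0 * params

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
dense_flops = flops_per_token(TOTAL_PARAMS)
sparse_flops = flops_per_token(ACTIVE_PARAMS)

print(f"Active fraction: {active_fraction:.1%}")
print(f"Dense 397B:       {dense_flops / 1e9:.0f} GFLOPs per token")
print(f"MoE (17B active): {sparse_flops / 1e9:.0f} GFLOPs per token")
print(f"Compute ratio:    {dense_flops / sparse_flops:.1f}x")
```

All 397B weights must still be stored and loaded. Sparsity cuts per-token compute, not the size of the checkpoint.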

An Efficient Hybrid Architecture: Gated Delta Networks

Qwen3.5 does not use the standard Transformer design. It uses an ‘Efficient Hybrid Architecture.’ Most LLMs rely solely on attention mechanisms, which can become slow on long text. Qwen3.5 combines Gated Delta Networks (linear attention) with Mixture-of-Experts (MoE).
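Linear attention layers such as Gated DeltaNet replace the growing key-value cache with a fixed-size state matrix that is updated once per token. The sketch below implements the gated delta rule as published for Gated DeltaNet for a single head in pure Python: S_t = α_t · S_{t−1}(I − β_t k_t k_tᵀ) + β_t v_t k_tᵀ, with output o_t = S_t q_t. It is a hedged illustration, not Qwen3.5's kernel. The gates α and β are passed in directly rather than computed from the input, and normalization, short convolutions, and output gating are omitted.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # state shape: d_v rows x d_k columns

def matvec(S: Matrix, x: Vector) -> Vector:
    """Return S @ x."""
    return [sum(s_ij * x_j for s_ij, x_j in zip(row, x)) for row in S]

def gated_delta_step(S: Matrix, k: Vector, v: Vector, alpha: float, beta: float) -> Matrix:
    """One recurrent update of the gated delta rule:
    S_t = alpha * S_{t-1} (I - beta k k^T) + beta v k^T
        = alpha * (S_{t-1} - beta (S_{t-1} k) k^T) + beta v k^T
    alpha in (0, 1] decays old memory; beta in [0, 1] sets the write strength.
    """
    Sk = matvec(S, k)  # what the memory currently returns for key k
    return [
        [alpha * (S[i][j] - beta * Sk[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(v))
    ]

def gated_delta_net(qs, ks, vs, alphas, betas, d_k: int, d_v: int) -> List[Vector]:
    """Run the recurrence over a sequence and return o_t = S_t q_t for each step."""
    S = [[0.0] * d_k for _ in range(d_v)]  # fixed-size state, independent of sequence length
    outputs = []
    for q, k, v, a, b in zip(qs, ks, vs, alphas, betas):
        S = gated_delta_step(S, k, v, a, b)
        outputs.append(matvec(S, q))
    return outputs

# Write two different values under the same unit-norm key, reading the key back each step.
k = [1.0, 0.0]
outs = gated_delta_net(
    qs=[k, k], ks=[k, k], vs=[[1.0, 2.0], [5.0, -1.0]],
    alphas=[1.0, 1.0], betas=[1.0, 1.0], d_k=2, d_v=2,
)
print(outs[-1])  # [5.0, -1.0]
```

The "delta" part shows up in the second write. The new value replaces the old one stored under the same key, so the read returns [5.0, -1.0]. Plain additive linear attention would accumulate both values and return [6.0, 1.0].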

The model consists of 60 layers with a hidden dimension of 4,096. These layers follow a fixed hidden layout that groups them into sets of 4:

- 3 blocks use Gated DeltaNet-plus-MoE.

- 1 block uses Gated Attention-plus-MoE.

- This pattern repeats 15 times to reach 60 layers.
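The repeating layout can be written out explicitly. This sketch assumes each group of 4 places its three Gated DeltaNet layers first and its Gated Attention layer last, matching the order of the bullets. The layer-type labels are made-up strings for illustration.

```python
# Build the 60-layer schedule: 15 repeats of
# (3 x Gated DeltaNet + MoE) followed by (1 x Gated Attention + MoE).
# Labels are illustrative strings, not identifiers from any Qwen codebase.
NUM_REPEATS = 15
BLOCK = ["gated_deltanet+moe"] * 3 + ["gated_attention+moe"]

layout = BLOCK * NUM_REPEATS

print(len(layout))                          # 60
print(layout.count("gated_deltanet+moe"))   # 45
print(layout.count("gated_attention+moe"))  # 15
# 0-indexed positions of the full-attention layers (every 4th layer):
print([i for i, kind in enumerate(layout) if kind == "gated_attention+moe"][:3])  # [3, 7, 11]
```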

Technical details include:

- Gated DeltaNet: It uses 64 linear attention heads for Values (V) and 16 heads for Queries and Keys (QK).

- MoE Structure: The model has 512 total experts. Each token activates 10 routed experts and 1 shared expert, for a total of 11 active experts per token.

- Vocabulary: The model uses an integrated vocabulary of 248,320 tokens.
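The routing step can be illustrated with a common top-k gating scheme. The router scores all 512 experts, keeps the 10 highest, softmax-normalizes their scores, and always adds the shared expert. Whether Qwen3.5 normalizes its gate weights exactly this way is an assumption. The random logits stand in for a real router's output.

```python
import heapq
import math
import random
from typing import List, Tuple

NUM_EXPERTS = 512  # routed experts in the layer
TOP_K = 10         # routed experts selected per token
NUM_SHARED = 1     # shared expert that every token always uses

def route(router_logits: List[float], top_k: int = TOP_K) -> List[Tuple[int, float]]:
    """Pick the top_k experts by router logit and softmax-normalize their scores.
    Returns (expert_index, gate_weight) pairs, highest weight first."""
    top = heapq.nlargest(top_k, range(len(router_logits)), key=lambda i: router_logits[i])
    m = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router output
selected = route(logits)

print(len(selected) + NUM_SHARED)             # 11 experts run for this token
print(round(sum(w for _, w in selected), 6))  # gate weights sum to one
```

In a typical MoE layer, the token's output would then be the shared expert's output plus the gate-weighted sum of the 10 selected experts' outputs. The other 502 experts do no work for this token.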

Native Multimodal Training: Early Fusion

Qwen3.5 is a native vision-language model. Many other models add vision capabilities later. Qwen3.5 used ‘Early Fusion’ training, which means the model learned from images and text at the same time.
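Here is a toy sketch of what "early fusion" means at the input level. Text tokens and image patches are mapped into the same embedding width and concatenated into one sequence before the first layer, so every layer processes both modalities jointly. The embedding functions, sizes, and token IDs are hypothetical stand-ins, not Qwen3.5's vision encoder or tokenizer. The sketch does not cover training.

```python
from typing import List, Tuple

# Toy early-fusion input builder. All names, sizes and "encoders" are hypothetical.
D_MODEL = 4

def embed_text(token_ids: List[int]) -> List[List[float]]:
    # Stand-in for a learned embedding-table lookup: one vector per text token.
    return [[float(t)] * D_MODEL for t in token_ids]

def embed_image(patches: List[List[float]]) -> List[List[float]]:
    # Stand-in for a vision encoder plus projector: one vector per image patch.
    return [[sum(p) / len(p)] * D_MODEL for p in patches]

def build_fused_sequence(segments: List[Tuple[str, list]]) -> Tuple[List[List[float]], List[str]]:
    """Map each segment into the shared D_MODEL space and concatenate them in order."""
    sequence: List[List[float]] = []
    modality: List[str] = []
    for kind, data in segments:
        vecs = embed_text(data) if kind == "text" else embed_image(data)
        sequence.extend(vecs)
        modality.extend([kind] * len(vecs))
    return sequence, modality

seq, mods = build_fused_sequence([
    ("text", [101, 102]),                             # a short text prompt
    ("image", [[0.1, 0.3], [0.5, 0.7], [0.2, 0.2]]),  # three image patches
    ("text", [103]),
])
print(len(seq), mods)
```

A late-fusion design would instead run a separate vision model and attach its features to an already-trained language model. Here, one sequence carries both modalities from the start.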

The training used trillions of multimodal tokens. This makes Qwen3.5 better at visual reasoning than previous Qwen3-VL versions. It is highly capable of ‘agentic’ tasks. For example, it can look at a UI screenshot and generate the exact HTML and CSS code. It can also analyze long videos with second-level accuracy.

The model supports the Model Context Protocol (MCP) and handles complex function calling. These features are essential for building agents that control applications or browse the web. On the IFBench test, it scores 76.5, which beats many proprietary models.
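An agent runs a tool-calling loop around the model: it parses the tool call the model emits, wraps it as an MCP `tools/call` JSON-RPC 2.0 request, and dispatches it to a tool. The sketch below is minimal. The `get_weather` tool and its canned data are hypothetical. The JSON shape assumed for the model's tool-call output is an illustration, not Qwen3.5's documented chat template. A real MCP client would send the request to a server over a transport instead of calling `dispatch` in-process.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical local tool the agent may call (returns canned demo data).
def get_weather(city: str) -> Dict[str, Any]:
    return {"city": city, "forecast": "sunny", "temp_c": 22}

TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

def to_mcp_request(call: Dict[str, Any], request_id: int) -> Dict[str, Any]:
    """Wrap a parsed tool call as an MCP `tools/call` JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": call["name"], "arguments": call["arguments"]},
    }

def dispatch(request: Dict[str, Any]) -> Dict[str, Any]:
    """Minimal in-process 'server': run the named tool and return a JSON-RPC result."""
    params = request["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False},
    }

# Assume the model emitted this tool call as JSON text.
model_output = '{"name": "get_weather", "arguments": {"city": "Hangzhou"}}'
request = to_mcp_request(json.loads(model_output), request_id=1)
response = dispatch(request)
print(response["result"]["content"][0]["text"])
```

The tool result would then be appended to the conversation, and the model would continue its response from there.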

Solving the Memory Wall: 1M Context Length

Long-form data processing is a core feature of Qwen3.5. The base model has a native context window of 262,144 (256K) tokens. The hosted Qwen3.5-Plus version goes further and supports 1M tokens.
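The hybrid layout helps with long inputs because only the full-attention layers keep a key-value cache that grows with every token. Gated DeltaNet layers keep a fixed-size state instead. The back-of-envelope sketch below compares a stack where all 60 layers use full attention with one where only 15 do. The KV head count, head dimension, and data type are hypothetical placeholders rather than published Qwen3.5 values, so only the 4x ratio (60 / 15) carries over.

```python
# Back-of-envelope KV-cache size, counting full-attention layers only.
# NUM_KV_HEADS, HEAD_DIM and BYTES_PER_ELEM are hypothetical placeholders,
# not published Qwen3.5 values. The 60 vs. 15 layer counts come from the article.
NUM_KV_HEADS = 2
HEAD_DIM = 256
BYTES_PER_ELEM = 2  # e.g. bf16

GiB = 1024 ** 3

def kv_cache_bytes(tokens: int, attn_layers: int) -> int:
    # Factor 2 = one key tensor plus one value tensor per attention layer.
    return 2 * tokens * attn_layers * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_ELEM

for tokens in (262_144, 1_000_000):
    all_attention = kv_cache_bytes(tokens, attn_layers=60)
    hybrid = kv_cache_bytes(tokens, attn_layers=15)
    print(f"{tokens:>9,} tokens: {all_attention / GiB:6.1f} GiB (60 attn layers) "
          f"vs {hybrid / GiB:6.1f} GiB (15 attn layers)")
```

This estimate ignores the DeltaNet states, which stay the same size no matter how long the input grows.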

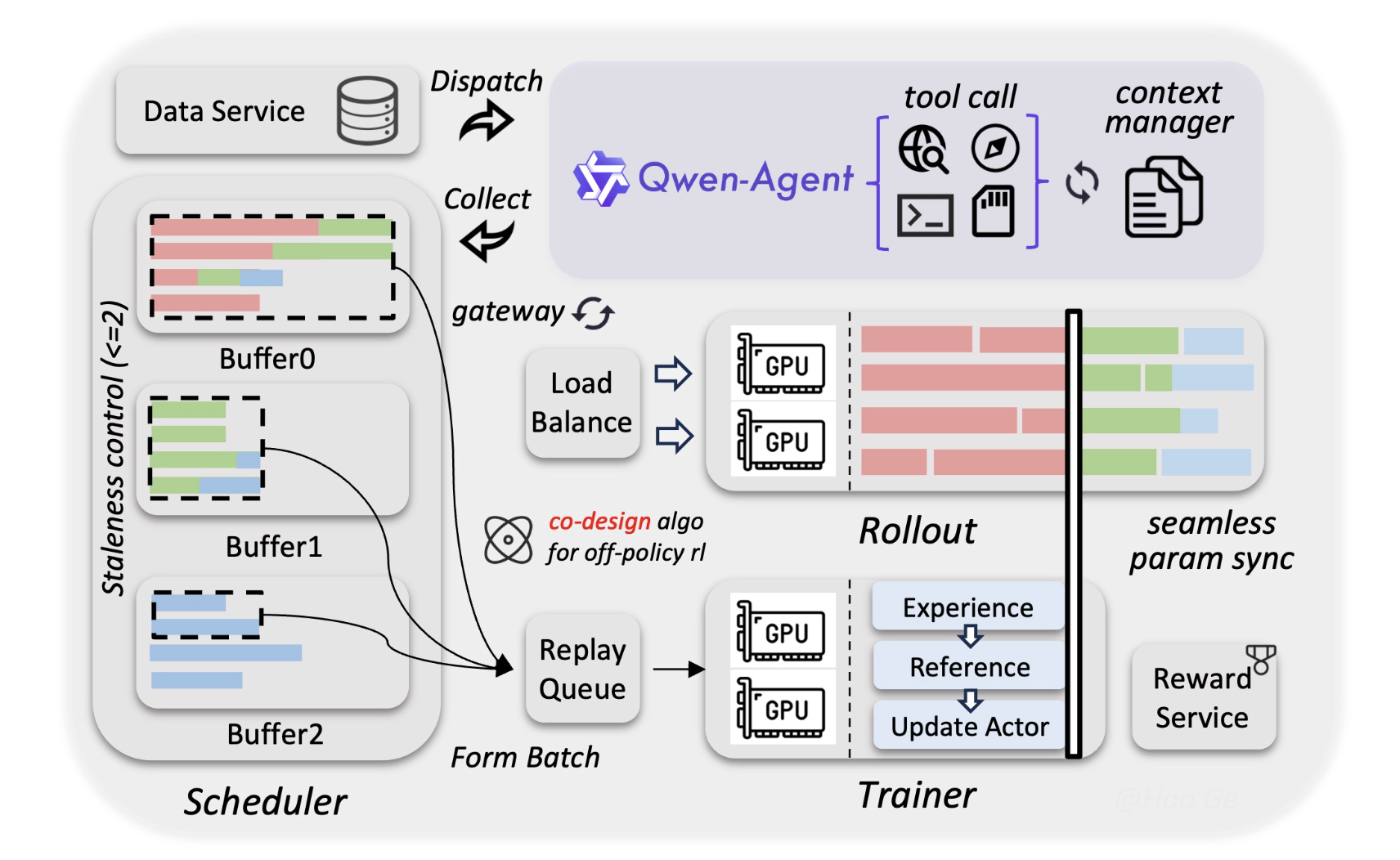

The Alibaba Qwen team used a new asynchronous Reinforcement Learning (RL) framework for this. It keeps the model accurate even at the end of a 1M-token document. For devs, this means you can feed an entire codebase into a single prompt. You don’t always need a complex Retrieval-Augmented Generation (RAG) system.
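Before skipping RAG, it helps to check whether a codebase actually fits. This hypothetical helper estimates token counts with a rough heuristic of 4 characters per token. That ratio is an assumption, so use the model's real tokenizer for accurate counts. The helper reserves room for the answer and compares the total against the 262,144-token native window and against a 1M window, taken here as 1,000,000 tokens.

```python
import os

# Hypothetical helper. CHARS_PER_TOKEN = 4 is a rough heuristic, NOT the real
# Qwen3.5 tokenizer; treating "1M" as 1,000,000 tokens is also an assumption.
CHARS_PER_TOKEN = 4
NATIVE_CONTEXT = 262_144    # open-weight model, native window
HOSTED_CONTEXT = 1_000_000  # hosted Qwen3.5-Plus

def estimate_tokens(text: str) -> int:
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division

def estimate_repo_tokens(root: str, exts=(".py", ".md", ".toml")) -> int:
    """Sum estimated tokens over files under root whose names end with one of exts."""
    total = 0
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if name.endswith(exts):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total

def fits(tokens: int, reserve_for_output: int = 8_192) -> dict:
    """Check whether the prompt plus room for the answer fits each window."""
    budget = tokens + reserve_for_output
    return {"native_256k": budget <= NATIVE_CONTEXT, "hosted_1m": budget <= HOSTED_CONTEXT}

# Example: about 1.2M characters of source code -> about 300,000 estimated tokens.
print(fits(estimate_tokens("x" * 1_200_000)))
```

On a real project, `fits(estimate_repo_tokens("path/to/repo"))` gives a first-pass answer before you count tokens with the real tokenizer.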

Performance and Benchmarks

The model excels in technical fields. It achieved high scores on Humanity’s Last Exam (HLE-Verified), a demanding benchmark of AI knowledge.

- Coding: It performs on par with top-tier closed-source models.

- Math: The model uses ‘Flexible Tool Use.’ It can write Python code to solve a math problem and then run that code to verify the answer.

- Languages: It supports 201 different languages and dialects. This is a big jump from 119 languages in the previous version.
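The "write code, then check it" pattern can be sketched on a toy problem. The equation and helper names are hypothetical. The verification step substitutes the computed answer back into the original problem instead of trusting the first result.

```python
import math

# Hypothetical problem: solve x^2 - 5x + 6 = 0, then verify by substitution.
def solve_quadratic(a: float, b: float, c: float) -> list:
    """Real roots of a*x^2 + b*x + c = 0, sorted ascending (assumes a != 0)."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return []
    r = math.sqrt(disc)
    return sorted({(-b - r) / (2 * a), (-b + r) / (2 * a)})

def verify(a: float, b: float, c: float, roots: list, tol: float = 1e-9) -> bool:
    """Substitute each root back into the equation and check the residual is ~0."""
    return all(abs(a * x * x + b * x + c) <= tol for x in roots)

roots = solve_quadratic(1, -5, 6)
print(roots, verify(1, -5, 6, roots))  # [2.0, 3.0] True
```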

Key Takeaways

- Hybrid Efficiency (MoE + Gated Delta Networks): Qwen3.5 uses a 3:1 ratio of Gated Delta Networks (linear attention) to standard Gated Attention blocks across 60 layers. This hybrid design enables an 8.6x to 19.0x decoding speedup compared to previous generations.

- Large Scale, Low Footprint: Qwen3.5-397B-A17B has 397B total parameters but activates only 17B per token. You get 400B-class intelligence with the inference speed and per-token compute of a much smaller model.

- Native Multimodal Foundation: Unlike models that add vision later, Qwen3.5 was trained with Early Fusion on trillions of text and image tokens simultaneously. This makes it a strong visual agent, scoring 76.5 on IFBench for following complex instructions in visual contexts.

- 1M Token Context: While the base model supports a native 256K-token context, the hosted Qwen3.5-Plus handles up to 1M tokens. This large window allows devs to process entire codebases or 2-hour videos without complex RAG pipelines.

Check out the technical specifications, model weights and GitHub repo.