Ant Group Releases LingBot-VLA, a Language-Based Model for Real-World Robot Transformation

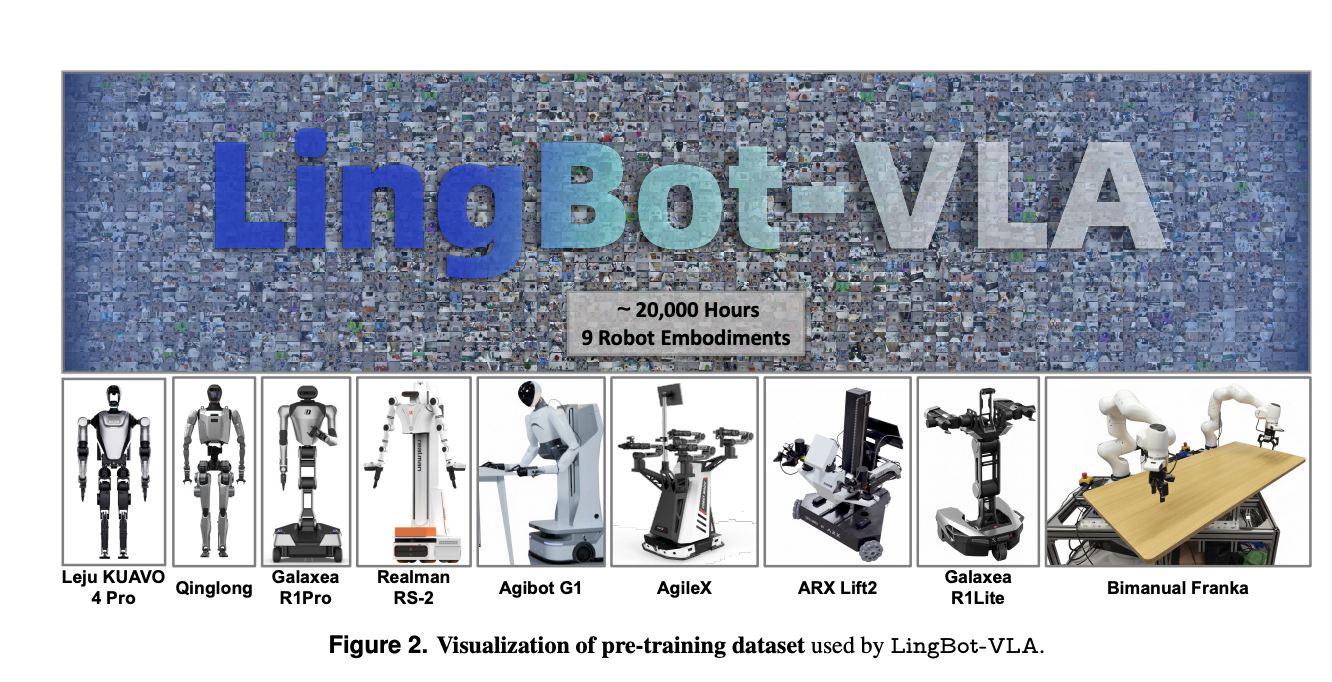

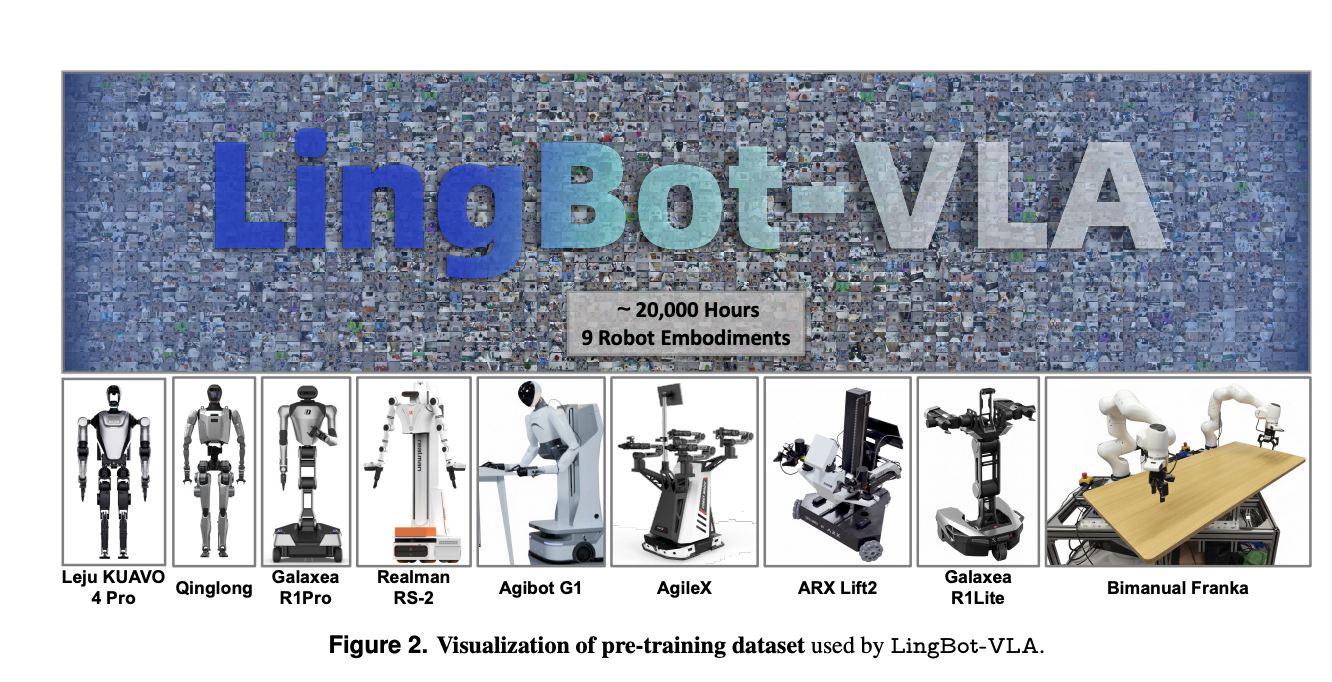

How do you build a single-vision language action model that can control many different binary robots in the real world? LingBot-VLA is the foundation model for Ant Group Robbyant’s new Vision Language Action that targets real-world robotic manipulation. It is trained on approximately 20,000 hours of teleoperated bimanual data collected from 9 two-arm robot simulations and tested on a large-scale GM-100 stand on three platforms. The model is designed to integrate cross morphology, data efficient post training, and high performance training on commodity GPU clusters.

Large scale data for two arms across 9 robot models

A pre-training data set was constructed from real-world phone applications in 9 popular dual-arm configurations. These include AgiBot G1, AgileX, Galaxea R1Lite, Galaxea R1Pro, Realman Rs 02, Leju KUAVO 4 Pro, Qinglong humanoid, ARX Lift2, and the Bimanual Franka setup. All systems have two arms of 6 or 7 degrees of freedom with parallel grippers and multiple RGB-D cameras providing multi-view monitoring.

Teleoperation uses the AgiBot G1 VR controller and the AgileX isomorphic arm controller. In each scene the recorded videos from all views are segmented by human annotators into clips corresponding to atomic actions. Free frames at the beginning and end of each clip are removed to reduce repetition. Work level and sub work level language instructions are then produced with Qwen3-VL-235B-A22B. This pipeline generates a synchronized sequence of images, instructions, and action channels for pre-training.

To illustrate the diversity of action the research team visualizes the most common atomic actions in training and testing in word clouds. About 50 percent of the atomic actions in the test set do not appear among the 100 most frequent actions in the training set. This gap ensures that testing emphasizes multitasking rather than frequency-based memorization.

Architecture, Mixture of Converters, and Flow Matching Functions

LingBot-VLA combines a robust multimodal backbone with action experts through a Mixture of Transformers architecture. The core of the vision language is Qwen2.5-VL. It encodes multi-view performance images and natural language instruction in a sequence of multimodal tokens. Correspondingly, the action expert receives the robot’s detection status and parts of the previous actions. Both branches share an attention module that models intelligent shared sequences in addition to action tokens.

At each time step the model creates a view sequence that includes tokens from the 3 camera views, the task instruction, and the robot state. An action sequence is a future action sequence with a time horizon set to 50 during pretraining. The goal of training is conditional flow matching. The model learns a vector field that transmits Gaussian noise to the ground truth action path in the form of linear probabilities. This provides a continuous action representation and produces a smooth, temporally consistent control suitable for two-arm manipulation.

LingBot-VLA uses block-based reasoning over shared sequences. View tokens can take care of each other in two ways. Action tokens can handle all view tokens and only past action tokens. This mask prevents the leakage of information from future actions to current observations while still allowing the action expert to use the full multimodal context at each decision step.

A spatial perspective with LingBot Depth distillation

Most VLA models struggle with depth perception when depth sensors fail or return false measurements. LingBot-VLA addresses this by integrating LingBot-Depth, a different spatial perception model based on Masked Depth Modeling. LingBot-Depth is trained in a self-supervised manner on a large RGB-D corpus and learns to reconstruct a dense depth metric when parts of the depth map are covered, often in regions where optical sensors tend to fail.

In LingBot-VLA visual queries from each camera view are matched to LingBot-Depth tokens by using a projection layer and lossy distillation. Cross-attention maps query the VLM in the hidden depth domain and training reduces their differences in LingBot-Depth features. This adds geometric awareness to the policy and improves the performance of tasks that require accurate 3D spatial thinking, such as placing, stacking, and folding under clutter and occlusion.

Real world benchmark of GM-100 on all 3 platforms

The main test uses the GM-100, a real-world benchmark with 100 manipulation tasks and 130 teleoperated filtered trajectories per task on each of the 3 hardware platforms. The test compares LingBot-VLA with π0.5GR00T N1.6, and WALL-OSS under the shared post training protocol. All methods tune well to public test sites with the same dataset, cluster size 256, and 20 epochs. Success Rate measures the completion of all sub-tasks within 3 minutes and Progress Score tracks component completion.

For GM-100, LingBot-VLA in depth achieves a mastery rate in all 3 fields. The average Success Rate is 17.30 percent and the Progress Rate is 35.41 percent. π0.5 it reaches 13.02 percent SR (success rate) and 27.65 percent PS (progress points). GR00T N1.6 and WALL-OSS are lower at 7.59 percent SR, 15.99 percent PS and 4.05 percent SR, 10.35 percent PS respectively. LingBot-VLA without depth already outperforms GR00T N1.6 and WALL-OSS and the depth variant adds additional benefits.

In the RoboTwin 2.0 simulation with 50 tasks, the models are trained on 50 demonstrations per task in clean scenes and 500 per task in random scenes. LingBot-VLA with depth achieves an average Success Rate of 88.56 percent on clean scenes and 86.68 percent on random scenes. π0.5 it reaches 82.74 percent and 76.76 percent in the same areas. This shows consistent benefits from parallel design and depth integration when domain randomization is strong.

Behavioral measurement and training for successful data submission

The research team analyzes scaling rules by changing prior training data from 3,000 to 20,000 hours in a subset of 25 occupations. Both Success Rate and Continuity Score increase proportionally with data volume, except for saturation at the largest scale studied. This is the first evidence that VLA models retain good scaling with real robot data of this size.

They also studied the effectiveness of post-training data on the AgiBot G1 using 8 tasks representative of the GM-100. With only 80 shows per task LingBot-VLA already exceeds π0.5 which uses the full 130 indicator set, for both Success Rate and Progression Score. As more trajectories are added the performance gap increases. This ensures that the pre-trained policy is transferred only in cases of specific areas of 100 jobs, which directly reduces the cost of adapting to new robots or jobs.

Operational training and open source toolkit

LingBot-VLA comes with a training stack optimized for multi-node efficiency. The codebase uses an FSDP-style strategy for parameterization and optimizer states, hybrid sharding for action experts, mixed-precision floating-point reduction and bfloat16 storage, and user-level acceleration with integrated attention caps and torch integration.

In an 8-GPU setup the research team reported an output of 261 samples per second for each GPU configuration for the Qwen2.5-VL-3B and PaliGemma-3B-pt-224 models. This corresponds to a speedup of 1.5 to 2.8 times compared to existing trending VLA codes such as StarVLA, Dexbotic, and OpenPI tested in the same benchmark based on Libero. Transfer rates are close to linear when going from 8 to 256 GPUs. The full post training toolkit is released as open source.

Key Takeaways

- LingBot-VLA is a theory-based language action model based on Qwen2.5-VL trained for nearly 20,000 hours of real-world dual arm telemetry across 9 robot embodiments, enabling cross morphology and generalization tasks.

- The model integrates LingBot’s Depth by using feature distillation so that the vision tokens correspond to the expert to complete the depth, which greatly improves the understanding of the 3D space for insertion, stacking, folding, and other sensitive geometry operations.

- In the GM-100 real-world benchmark, LingBot-VLA in depth achieves a 17.30 percent average success rate and a 35.41 percent average success rate, which is more than π0.5GR00T N1.6, and WALL OSS under the same post training protocol.

- LingBot-VLA shows good data performance in post training, as in AgiBot G1 it can exceed π0.5 using 130 shows per task while only using about 80 shows per task, and performance continues to improve as more trajectories are added.

Check it out Paper, Model Weight, Repo and Project Page. Also, feel free to follow us Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to Our newspaper. Wait! are you on telegram? now you can join us on telegram too.