Google AI Releases Gemini 3.1 Pro with 1 Million Token Context and 77.1 Percent ARC-AGI-2 Reasoning for AI Agents

Google has officially shifted the Gemini era into high gear with the release of Gemini 3.1 Pro, the first version update to the Gemini 3 series. This release is not just a minor patch; it is a targeted strike at the ‘agentic’ AI market, focusing on reasoning stability, software engineering, and tool-use reliability.

For devs, this update marks a change. We’re moving from ‘chat’ models to ‘action’ models. Gemini 3.1 Pro is designed to be the primary engine for autonomous agents that can navigate file systems, execute code, and reason through scientific problems with a success rate that now rivals, and in some cases exceeds, the industry’s leading frontier models.

Large Context, Precise Output

One of the most immediate technical upgrades is the handling of scale. Gemini 3.1 Pro Preview maintains a large 1M token input context window. To put this in perspective for software developers: you can now feed the model a medium-sized code repository, and it will have enough ‘memory’ to understand the dependencies across different files without losing track of the overall structure.

However, the real news is the 65k token output limit. This 65k window is a significant leap for developers building long-form generators. Whether you’re producing a 100-page technical manual or a complex, multi-module Python program, the model can now complete the task in one go without hitting the ‘max token’ wall.
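As a rough illustration of what the input limit means in practice, the sketch below estimates whether a set of source files fits in the 1M token window. The four-characters-per-token ratio is a common rule of thumb and not Gemini's actual tokenizer, and the function and constant names are hypothetical.

```python
# Rough context-budget check. The chars-per-token ratio is a heuristic
# assumption, not the model's real tokenizer.
INPUT_LIMIT_TOKENS = 1_000_000  # input window cited in the article
CHARS_PER_TOKEN = 4             # assumed average; varies with content


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string with the chars/4 heuristic."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(files: dict, reserve: int = 0) -> bool:
    """Return True if all file bodies plus a reserved prompt budget fit."""
    total = sum(estimate_tokens(body) for body in files.values())
    return total + reserve <= INPUT_LIMIT_TOKENS


# Hypothetical two-file repository, with 2,000 tokens reserved for instructions.
repo = {"main.py": "print('hello')\n" * 1000, "util.py": "x = 1\n" * 500}
print(fits_in_context(repo, reserve=2_000))
```

For a real budget, a token-counting call from the provider's SDK would replace the heuristic; the structure of the check stays the same.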

Doubling Down on Reasoning

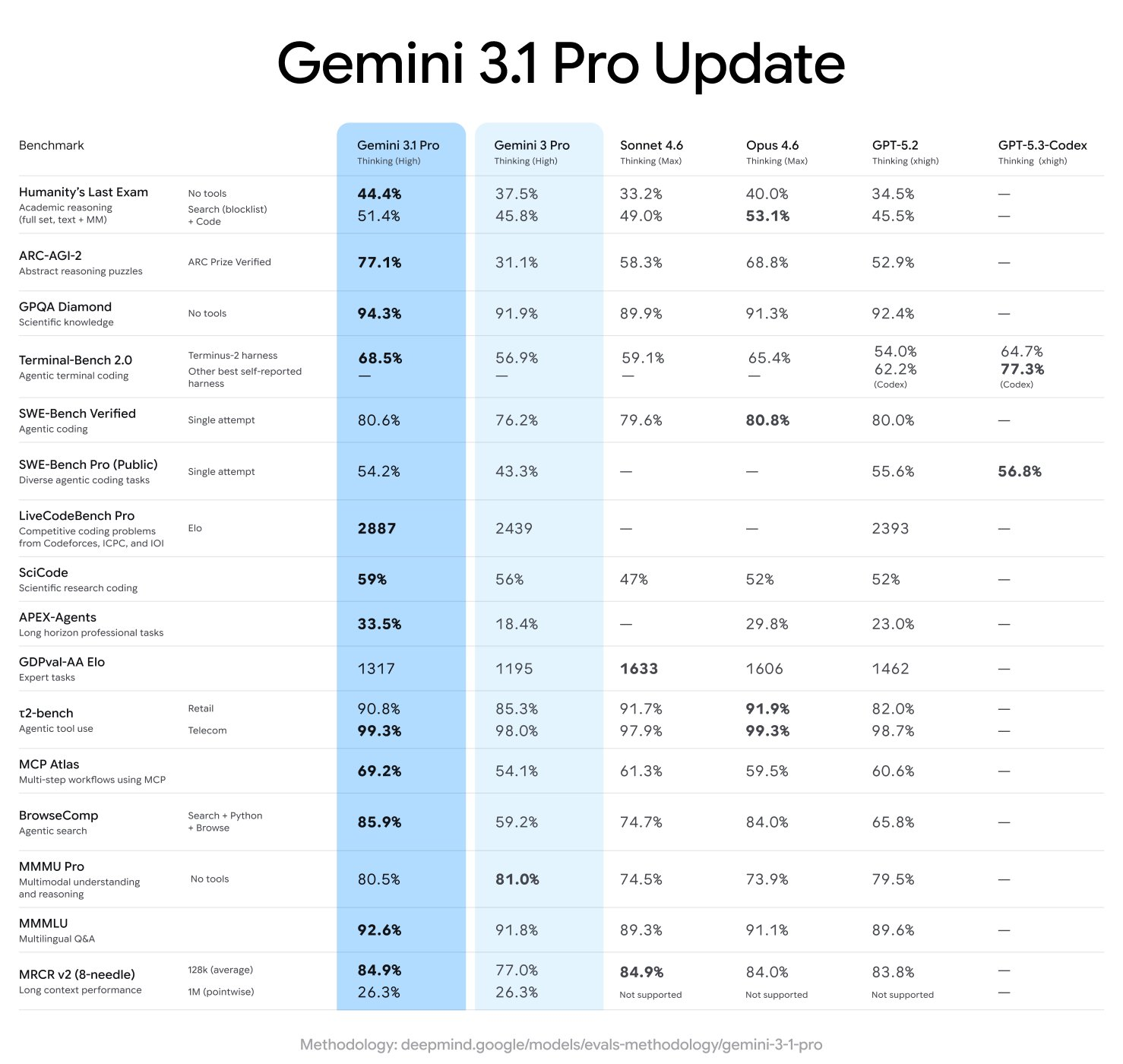

If Gemini 3.0 was about introducing ‘Critical Thinking,’ Gemini 3.1 is about putting that thinking to work. The performance jumps on the hardest benchmarks are notable:

| Benchmark | Score | What it measures |
| --- | --- | --- |
| ARC-AGI-2 | 77.1% | Ability to solve entirely novel logic patterns |
| GPQA Diamond | 94.1% | Graduate-level scientific reasoning |
| SciCode | 58.9% | Python programming for scientific computing |
| Terminal-Bench Hard | 53.8% | Agentic coding and terminal use |
| Humanity’s Last Exam (HLE) | 44.7% | Reasoning against near-human limits |

The 77.1% on ARC-AGI-2 is the headline figure here. The Google team says this represents more than double the reasoning performance of the original Gemini 3 Pro. This means the model is less likely to rely on pattern matching from its training data and is better able to ‘figure it out’ when faced with a novel case.

Agentic Toolkit: Custom Tools and ‘Antigravity’

The Google team is making a clear play for the developer’s terminal. Along with the main model, they introduced a specialized endpoint: gemini-3.1-pro-preview-customtools.

This endpoint is intended for developers who mix bash commands with custom functions. In previous versions, models often struggled to prioritize which tool to use, sometimes hallucinating a search when reading a local file would have sufficed. The customtools variant is specifically tuned to prioritize tools like view_file or search_code, making it a more reliable backbone for autonomous coding agents.

This release also integrates deeply with Google Antigravity, the company’s new agentic development platform. Developers can now use a new ‘medium’ thinking level. This lets you adjust the ‘thinking budget’: use deep thinking for complex debugging, and scale back to medium or low for routine API calls to save latency and cost.
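One way to picture the thinking-budget trade-off is a small routing table that maps a task type to a thinking level. This is only a sketch: the level strings ("low", "medium", "high"), the task categories, and the function name are assumptions for illustration, not confirmed API values.

```python
from enum import Enum


class ThinkingLevel(str, Enum):
    # Assumed level names; check the API reference for the real values.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical task categories mapped to a thinking level.
TASK_LEVELS = {
    "complex_debugging": ThinkingLevel.HIGH,
    "code_review": ThinkingLevel.MEDIUM,
    "routine_api_call": ThinkingLevel.LOW,
}


def pick_thinking_level(task_kind: str) -> ThinkingLevel:
    """Choose a level for a task, defaulting to MEDIUM for unknown kinds."""
    return TASK_LEVELS.get(task_kind, ThinkingLevel.MEDIUM)


print(pick_thinking_level("complex_debugging").value)
```

Defaulting unknown tasks to the middle level is a design choice here: it avoids paying for deep reasoning on every call while still giving unclassified work more than the minimum.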

API Breaking Changes and New File Methods

For those already building on the Gemini API, there is a small but critical breaking change. In the Interactions API v1beta, the field total_reasoning_tokens has been renamed to total_thought_tokens. This change aligns with the ‘thought signatures’ introduced in the Gemini 3 family: encrypted representations of the model’s internal reasoning that must be passed back to the model to maintain context across a multi-step agentic workflow.
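Code that reads token usage can tolerate the rename by checking the new field first and falling back to the old one. The sketch below assumes the usage data arrives as a plain dictionary; that shape and the helper name are hypothetical, and only the two field names come from the article.

```python
def thought_token_count(usage: dict) -> int:
    """Read the thought-token count, accepting the new or the old field name."""
    if "total_thought_tokens" in usage:
        return usage["total_thought_tokens"]
    # Fall back to the pre-rename field for responses from older versions.
    return usage.get("total_reasoning_tokens", 0)


# Hypothetical usage payloads before and after the rename.
print(thought_token_count({"total_thought_tokens": 812}))
print(thought_token_count({"total_reasoning_tokens": 640}))
```

A shim like this lets a codebase migrate gradually instead of breaking the moment the new field name appears.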

The model’s appetite for data has also grown. Key updates to file handling include:

- 100MB File Limit: The previous 20MB cap for API uploads has been raised fivefold to 100MB.

- Direct YouTube Support: You can now pass a YouTube URL directly as a media source. The model ‘watches’ the video via the URL rather than requiring a manual upload.

- Cloud Integration: Support for Cloud Storage buckets and pre-signed URLs for private databases as direct data sources.
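The file-handling rules above suggest a simple pre-flight check before sending media to the model. The sketch below is illustrative only: the helper name, the return labels, the list of YouTube hostnames, and the reading of ‘100MB’ as 100 MiB are all assumptions, and no real API call is made.

```python
from typing import Optional
from urllib.parse import urlparse

MAX_UPLOAD_BYTES = 100 * 1024 * 1024  # assumes "100MB" means 100 MiB

# Assumed set of hostnames treated as YouTube links.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "youtu.be"}


def plan_media_source(source: str, size_bytes: Optional[int] = None) -> str:
    """Return 'url', 'upload', or 'too_large' for a media source."""
    host = urlparse(source).hostname
    if host in YOUTUBE_HOSTS:
        return "url"  # pass the link directly instead of uploading
    if size_bytes is not None and size_bytes > MAX_UPLOAD_BYTES:
        return "too_large"
    return "upload"


print(plan_media_source("https://youtu.be/abc123"))
print(plan_media_source("demo.mp4", size_bytes=50 * 1024 * 1024))
```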

The Economics of Intelligence

Pricing for Gemini 3.1 Pro Preview remains aggressive. For prompts under 200k tokens, input costs $2 per 1 million tokens and output costs $12 per 1 million. For contexts over 200k, the price rises to $4 for input and $18 for output.
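To make the two pricing tiers concrete, here is a small cost estimator built from the rates quoted above. It assumes the tier is chosen by the input token count, that the whole request is billed at that tier, and that a prompt of exactly 200k tokens falls in the lower tier; the article does not spell out those details, and the function name is hypothetical.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate request cost in USD from the per-1M-token rates in the article."""
    # Assumption: prompts above 200k input tokens move the whole request
    # to the higher tier.
    long_context = input_tokens > 200_000
    in_rate, out_rate = (4.0, 18.0) if long_context else (2.0, 12.0)
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# 100k input + 10k output at the lower tier: 0.20 + 0.12 = 0.32 USD
print(round(estimate_cost_usd(100_000, 10_000), 2))
# 500k input + 20k output at the higher tier: 2.00 + 0.36 = 2.36 USD
print(round(estimate_cost_usd(500_000, 20_000), 2))
```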

Compared to competitors like Claude Opus 4.6 or GPT-5.2, the Google team positions Gemini 3.1 Pro as a ‘performance leader.’ According to data from Artificial Analysis, Gemini 3.1 Pro now holds the top spot in its Intelligence Index while costing about half as much to run as its closest peers.

Key Takeaways

- 1M/65K Context Window: The model maintains a 1M token input window for large-scale data and code repositories, while greatly raising the output limit to 65k tokens for long-form code and document generation.

- Leap in Logic and Reasoning: Performance on the ARC-AGI-2 benchmark reached 77.1%, which represents more than double the reasoning capability of previous versions. The model also scored 94.1% on GPQA Diamond for graduate-level scientific tasks.

- Dedicated Agentic Endpoints: The Google team introduced a specialized gemini-3.1-pro-preview-customtools endpoint. It is specifically tuned to prioritize bash commands and system tools (like view_file and search_code) for reliable autonomous agents.

- API Breaking Change: Developers should update their codebases, as the field total_reasoning_tokens has been renamed to total_thought_tokens in the v1beta Interactions API to better match the model’s internal “thought” processing.

- Advanced File and Media Handling: The API file size limit has increased from 20MB to 100MB. Additionally, developers can now pass YouTube URLs directly into the prompt, allowing the model to analyze video content without needing to download or re-upload files.
