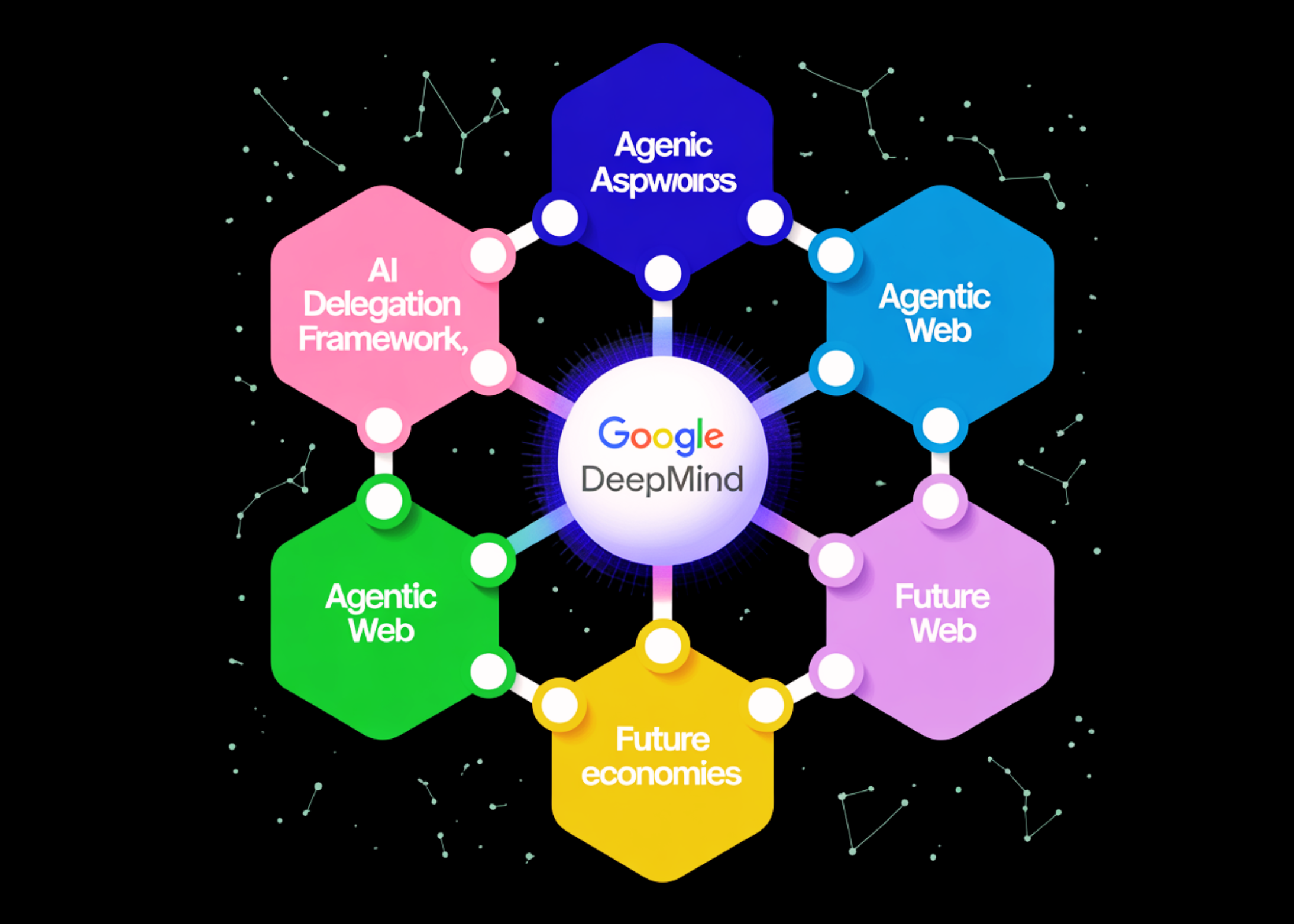

Google DeepMind Proposes New Framework for Intelligent AI Delegation to Secure the Emerging Agentic Web for Future Economies

The AI industry is currently focused on ‘agents’—autonomous systems that do more than just chat. However, many current multi-agent systems rely on rigid, hard-coded heuristics that fail when the environment changes.

Google DeepMind researchers have proposed a new solution. The research team argues that for the ‘agentic web’ to scale, agents must move beyond simple task splitting and adopt human-like organizational principles such as authority, responsibility, and accountability.

Defining ‘Intelligent’ Delegation

In standard software, calling a subroutine simply ‘outsources’ a piece of work. Intelligent delegation is different. It is a sequence of decisions in which a delegator transfers authority and responsibility to a delegatee. This process involves risk assessment, capability matching, and establishing trust.

The 5 Pillars of the Framework

To build this, the research team identified 5 core requirements, each mapped to specific technical protocols:

| Framework Pillar | Technical Implementation | Core Function |
| --- | --- | --- |
| Dynamic Assessment | Task Decomposition and Assignment | Inferring agent state and capacity at a fine-grained level. |
| Adaptive Execution | Adaptive Coordination | Handling context shifts and runtime failures. |
| Structural Transparency | Monitoring and Verifiable Completion | Auditing both the process and the end result. |
| Scalable Market | Trust and Reputation, Multi-Objective Optimization | Efficient, trusted coordination in open markets. |
| Systemic Resilience | Security and Permission Handling | Preventing cascading failures and malicious use. |

Engineering Strategy: ‘Contract-First’ Decomposition

The most significant change is contract-first decomposition. Under this principle, a delegator assigns a task only if the result can be precisely verified.

If a task is too subjective or too complex to be verified, such as writing a ‘compelling research paper’, the system must recursively decompose it. This continues until the sub-tasks match the available verification tools, such as unit tests or formal mathematical proofs.
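The recursion described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the `Task` class, the `delegable_units` function, and the example sub-tasks and verifiers are all hypothetical names chosen for illustration. The only rule it encodes is that a task is handed out when an automated verifier exists for it, and is otherwise split further.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Task:
    """Hypothetical task node; `verifier` is None when no automated check exists."""
    name: str
    verifier: Optional[Callable[[str], bool]] = None
    subtasks: list["Task"] = field(default_factory=list)


def delegable_units(task: Task) -> list[Task]:
    """Return the verifiable tasks that may be delegated, decomposing recursively."""
    if task.verifier is not None:
        return [task]  # a contract can be written: the outcome is checkable
    if not task.subtasks:
        raise ValueError(f"{task.name!r} is unverifiable and cannot be decomposed")
    units: list[Task] = []
    for sub in task.subtasks:
        units.extend(delegable_units(sub))
    return units


# Illustrative breakdown only; the sub-tasks and checks are assumptions.
paper = Task(
    "write a compelling research paper",
    subtasks=[
        Task("implement experiment code", verifier=lambda out: "tests passed" in out),
        Task(
            "prove convergence",
            subtasks=[Task("formalize lemma", verifier=lambda out: out.startswith("QED"))],
        ),
    ],
)

print([t.name for t in delegable_units(paper)])
# ['implement experiment code', 'formalize lemma']
```

A task with neither a verifier nor sub-tasks raises an error here, which reflects the principle that nothing is delegated without a way to check the result.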

Recursive Verification: The Chain of Custody

In a delegation chain such as 𝐴 → 𝐵 → 𝐶, accountability is transitive.

- Agent B is responsible for verifying the work of C.

- When Agent B returns the result to A, it must provide a full chain of cryptographically signed attestations.

- Agent A then performs a two-step check: it verifies B’s direct work and confirms that B correctly verified C.
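A rough sketch of how such a chain of attestations might be checked is shown below. It makes several assumptions: HMAC with per-agent secrets from Python's standard library stands in for real public-key signatures, and the `attest` and `verify_chain` functions, the `KEYS` table, and the record layout are hypothetical. It covers only the integrity of the chain, not whether the work itself is good.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical per-agent keys. HMAC with shared secrets stands in for the
# public-key signatures an actual deployment would need.
KEYS = {"B": b"key-of-agent-b", "C": b"key-of-agent-c"}


def _payload(agent: str, result: str, child: Optional[dict]) -> bytes:
    """Serialize what gets signed: the result plus the child's signature, if any."""
    child_sig = child["sig"] if child else None
    return json.dumps(
        {"agent": agent, "result": result, "child_sig": child_sig}, sort_keys=True
    ).encode()


def attest(agent: str, result: str, child: Optional[dict] = None) -> dict:
    """Sign a result and bind it to the attestation of the sub-delegatee."""
    sig = hmac.new(KEYS[agent], _payload(agent, result, child), hashlib.sha256).hexdigest()
    return {"agent": agent, "result": result, "child": child, "sig": sig}


def verify_chain(att: Optional[dict]) -> bool:
    """Check every link's signature, from the direct delegatee down the chain."""
    while att is not None:
        expected = hmac.new(
            KEYS[att["agent"]],
            _payload(att["agent"], att["result"], att["child"]),
            hashlib.sha256,
        ).hexdigest()
        if not hmac.compare_digest(expected, att["sig"]):
            return False
        att = att["child"]
    return True


c_att = attest("C", "parsed 120 records")
b_att = attest("B", "report built from 120 records", child=c_att)
print(verify_chain(b_att))  # True

c_att["result"] = "parsed 999 records"  # tamper with C's reported result
print(verify_chain(b_att))  # False
```

Because B signs over C's signature, A can detect both a forged attestation from B and any later change to what C reported.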

Security: Tokens and Tunnels

Scaling these chains introduces major security risks, including Data Exfiltration, Backdoor Implanting, and Model Extraction.

To protect the network, the DeepMind team suggests Delegation Capability Tokens (DCTs). Based on technologies such as Macaroons or Biscuits, these tokens use ‘cryptographic caveats’ to enforce the principle of least privilege. For example, an agent might receive a token that allows it to READ a specific Google Drive folder but disallows any WRITE operations.
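The caveat mechanism can be sketched with a chained HMAC, in the style of Macaroons. This is a simplified illustration and not the DCT format from the paper or the API of any Macaroons or Biscuit library: the `mint`, `attenuate`, and `authorize` functions, the `field=value` caveat syntax, and the `ROOT` secret are all assumptions made for the example.

```python
import hashlib
import hmac


def _mac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode(), hashlib.sha256).digest()


def mint(root_key: bytes, token_id: str) -> dict:
    """Issue a token with no caveats (full authority under this root key)."""
    return {"id": token_id, "caveats": [], "sig": _mac(root_key, token_id)}


def attenuate(token: dict, caveat: str) -> dict:
    """Add a caveat. The holder needs no root key and cannot strip it later."""
    return {
        "id": token["id"],
        "caveats": token["caveats"] + [caveat],
        "sig": _mac(token["sig"], caveat),  # new signature is keyed by the old one
    }


def authorize(root_key: bytes, token: dict, request: dict) -> bool:
    """Recompute the HMAC chain, then require every caveat to hold for the request."""
    sig = _mac(root_key, token["id"])
    for caveat in token["caveats"]:
        sig = _mac(sig, caveat)
    if not hmac.compare_digest(sig, token["sig"]):
        return False
    for caveat in token["caveats"]:
        field, _, allowed = caveat.partition("=")
        if request.get(field) != allowed:
            return False
    return True


ROOT = b"resource-owner-secret"  # hypothetical; known only to the resource owner
token = attenuate(attenuate(mint(ROOT, "dct-1"), "op=READ"), "folder=reports")

print(authorize(ROOT, token, {"op": "READ", "folder": "reports"}))   # True
print(authorize(ROOT, token, {"op": "WRITE", "folder": "reports"}))  # False

forged = {**token, "caveats": ["folder=reports"]}  # try to drop the READ-only caveat
print(authorize(ROOT, forged, {"op": "WRITE", "folder": "reports"}))  # False
```

Each caveat can only narrow what the token permits, so an agent can pass a more restricted token down the chain without ever contacting the resource owner.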

Examining Current Protocols

The research team analyzed whether current industry standards are ready for this framework. While these protocols provide a foundation, each has ‘missing pieces’ for high-stakes delegation.

- MCP (Model Context Protocol): Standardizes how models connect to tools. Gap: It lacks a policy layer to govern permissions across deep delegation chains.

- A2A (Agent-to-Agent): Manages discovery and task life cycles. Gap: It has no standardized support for Zero-Knowledge Proofs (ZKPs) or digital signature chains.

- AP2 (Agent Payments Protocol): Authorizes agents to spend money. Gap: It cannot verify the quality of work before releasing payment.

- UCP (Universal Commerce Protocol): Standardizes commercial transactions. Gap: It is optimized for shopping and fulfillment, not abstract computational tasks.

Key Takeaways

- Move Beyond Heuristics: Current AI delegation relies on simple, hard-coded heuristics that cannot adapt dynamically to environmental changes or unexpected failures. Intelligent delegation requires an adaptive framework that covers the transfer of authority, responsibility, and accountability.

- ‘Contract-First’ Task Decomposition: For complex goals, delegators should use a ‘contract-first’ approach, where tasks are broken down into smaller units that match specific automated verification capabilities, such as unit tests or formal proofs.

- Transitive Accountability in Chains: In long delegation chains (e.g., 𝐴 → 𝐵 → 𝐶), responsibility is transitive. Agent B is responsible for C’s work, and Agent A must verify both B’s direct work and that B correctly verified C’s attestations.

- Attenuated Security via Tokens: To prevent systemic breaches and the ‘confused deputy problem,’ agents should use Delegation Capability Tokens (DCTs) that provide attenuated authorization. This ensures that agents operate under the principle of least privilege, with access limited to specific sets of resources and permitted operations.


Michal Sutter is a data science expert with a Master of Science in Data Science from the University of Padova. With a strong foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex data sets into actionable insights.