How to Build a Self-Organizing Memory System for Long-Term AI Agent Reasoning

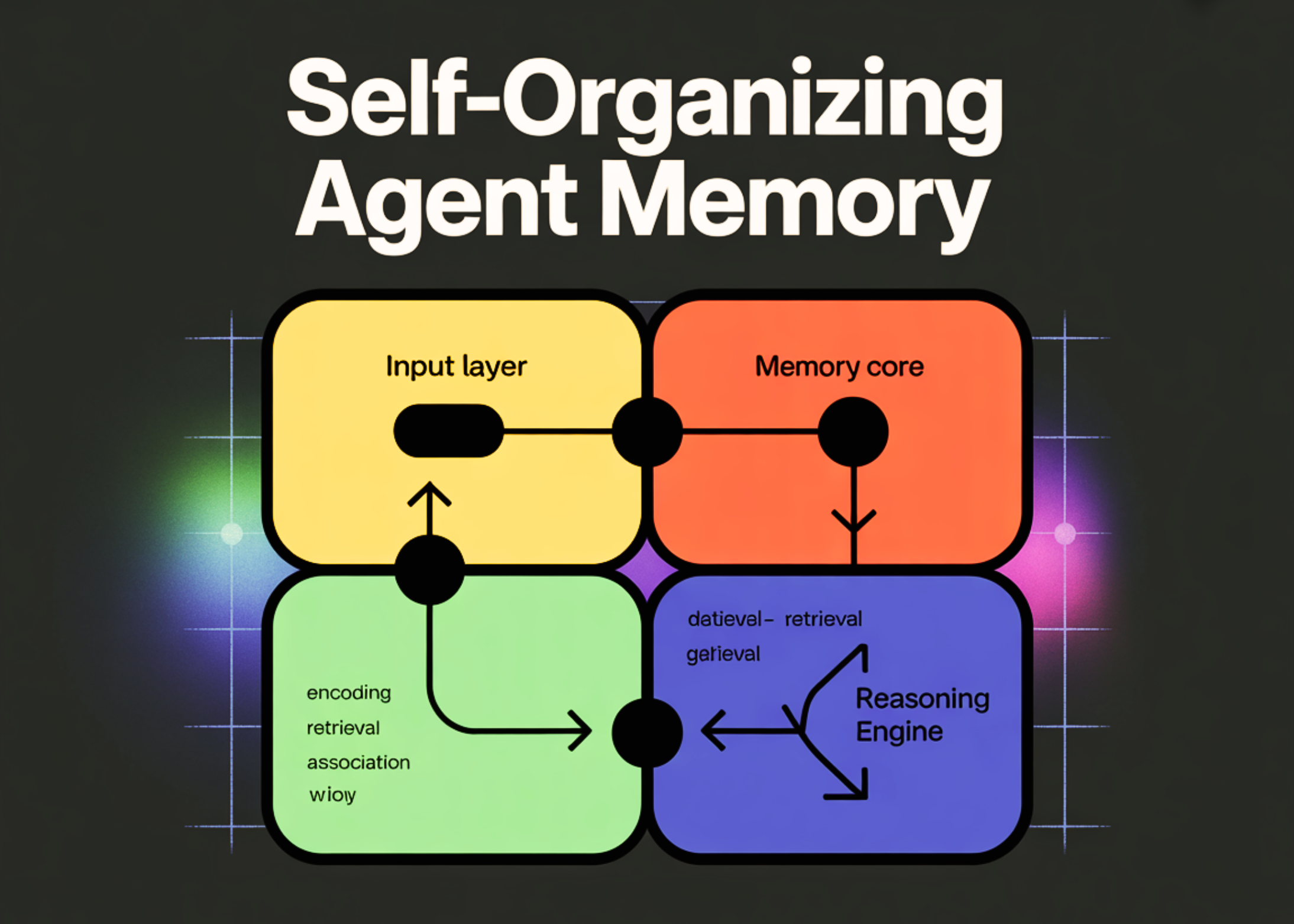

In this tutorial, we build a self-organizing memory system for an agent that goes beyond storing raw conversation history and instead structures interactions into persistent, meaningful units of knowledge. We design the system so that reasoning and memory management are clearly separated: a dedicated component extracts, compresses, and organizes information, while the main agent focuses on responding to the user. We use structured storage with SQLite, scene-based grouping, and summary consolidation, and show how an agent can retain useful context over long horizons without relying on vector retrieval alone.

```python
import sqlite3
import json
import re
from datetime import datetime
from typing import List, Dict
from getpass import getpass
from openai import OpenAI

# Read the key interactively so it never lands in the source file
OPENAI_API_KEY = getpass("Enter your OpenAI API key: ").strip()
client = OpenAI(api_key=OPENAI_API_KEY)

def llm(prompt, temperature=0.1, max_tokens=500):
    # Single entry point for every model call in the tutorial
    return client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,
        max_tokens=max_tokens
    ).choices[0].message.content.strip()
```

We set up the runtime by importing the required libraries and securely collecting the API key at run time. We initialize the OpenAI client and define a single helper function that routes all model calls. Every part of the pipeline depends on this shared interface, which keeps generation behavior consistent.
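Because every component reaches the model through one function, that function is also a convenient seam for testing. The following is a minimal sketch and not part of the original code: `make_llm` and `echo_backend` are hypothetical names, and the backend simply echoes the prompt so the rest of the pipeline could be exercised without an API key.

```python
from typing import Callable

# Hypothetical backend signature: (prompt, temperature, max_tokens) -> raw text
Backend = Callable[[str, float, int], str]

def make_llm(backend: Backend) -> Callable[..., str]:
    """Wrap a backend so all callers share the same defaults and cleanup."""
    def llm(prompt: str, temperature: float = 0.1, max_tokens: int = 500) -> str:
        return backend(prompt, temperature, max_tokens).strip()
    return llm

def echo_backend(prompt: str, temperature: float, max_tokens: int) -> str:
    # Offline stand-in for the real API call (assumption for illustration)
    return f"  [stub t={temperature}] {prompt}  "

stub_llm = make_llm(echo_backend)
print(stub_llm("hello"))
```

Swapping `echo_backend` for a function that calls the real client would leave every caller unchanged, which is the main benefit of keeping a single helper.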

```python
class MemoryDB:
    def __init__(self):
        self.db = sqlite3.connect(":memory:")
        self.db.row_factory = sqlite3.Row  # allow row["column"] access
        self._init_schema()

    def _init_schema(self):
        # Atomic memory units extracted from each interaction
        self.db.execute("""
        CREATE TABLE mem_cells (
            id INTEGER PRIMARY KEY,
            scene TEXT,
            cell_type TEXT,
            salience REAL,
            content TEXT,
            created_at TEXT
        )
        """)
        # One consolidated summary per scene
        self.db.execute("""
        CREATE TABLE mem_scenes (
            scene TEXT PRIMARY KEY,
            summary TEXT,
            updated_at TEXT
        )
        """)
        # Full-text index over the cells
        self.db.execute("""
        CREATE VIRTUAL TABLE mem_cells_fts
        USING fts5(content, scene, cell_type)
        """)

    def insert_cell(self, cell):
        # Store the cell, then mirror it into the full-text index
        self.db.execute(
            "INSERT INTO mem_cells VALUES(NULL,?,?,?,?,?)",
            (
                cell["scene"],
                cell["cell_type"],
                cell["salience"],
                json.dumps(cell["content"]),
                datetime.utcnow().isoformat()
            )
        )
        self.db.execute(
            "INSERT INTO mem_cells_fts VALUES(?,?,?)",
            (
                json.dumps(cell["content"]),
                cell["scene"],
                cell["cell_type"]
            )
        )
        self.db.commit()
```

We define a structured memory database that persists information across interactions. We create tables for atomic memory cells and high-level scene summaries, plus a full-text search index that enables symbolic retrieval. We also implement the logic that inserts new memory entries in a normalized, queryable form. Because the connection uses `:memory:`, the data lives only for the lifetime of the process; passing a file path to `sqlite3.connect` would keep it on disk.
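Each cell is written twice, once to the table and once to the full-text index, so the two can drift apart if the second write fails. A minimal sketch of one way to guard against that is to wrap both inserts in a single transaction by using the connection as a context manager. `insert_cell_atomic` is a hypothetical helper, the timestamp is passed in to keep the example deterministic, and the sketch assumes the local SQLite build includes FTS5.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE mem_cells (id INTEGER PRIMARY KEY, scene TEXT, "
    "cell_type TEXT, salience REAL, content TEXT, created_at TEXT)"
)
conn.execute("CREATE VIRTUAL TABLE mem_cells_fts USING fts5(content, scene, cell_type)")

def insert_cell_atomic(conn, cell, created_at):
    """Write a cell and its index entry in one transaction (hypothetical helper)."""
    payload = json.dumps(cell["content"])
    with conn:  # commits on success, rolls back if either INSERT raises
        conn.execute(
            "INSERT INTO mem_cells VALUES(NULL,?,?,?,?,?)",
            (cell["scene"], cell["cell_type"], cell["salience"], payload, created_at),
        )
        conn.execute(
            "INSERT INTO mem_cells_fts VALUES(?,?,?)",
            (payload, cell["scene"], cell["cell_type"]),
        )

insert_cell_atomic(
    conn,
    {"scene": "storage", "cell_type": "decision", "salience": 0.9,
     "content": "Use SQLite for memory cells"},
    "2024-01-01T00:00:00",
)

# The default FTS5 tokenizer is case-insensitive, so "sqlite" finds "SQLite"
hits = conn.execute(
    "SELECT scene FROM mem_cells_fts WHERE mem_cells_fts MATCH ?", ("sqlite",)
).fetchall()
print(hits)
```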

```python
    # MemoryDB methods, continued
    def get_scene(self, scene):
        return self.db.execute(
            "SELECT * FROM mem_scenes WHERE scene=?", (scene,)
        ).fetchone()

    def upsert_scene(self, scene, summary):
        # Insert the summary, or overwrite it if the scene already exists
        self.db.execute("""
        INSERT INTO mem_scenes VALUES(?,?,?)
        ON CONFLICT(scene) DO UPDATE SET
            summary=excluded.summary,
            updated_at=excluded.updated_at
        """, (scene, summary, datetime.utcnow().isoformat()))
        self.db.commit()

    def retrieve_scene_context(self, query, limit=6):
        # Keep only alphanumeric tokens so punctuation cannot break the FTS query
        tokens = re.findall(r"[a-zA-Z0-9]+", query)
        if not tokens:
            return []
        # Quote each token so words like AND, OR, NOT are not parsed as FTS5 operators
        fts_query = " OR ".join(f'"{t}"' for t in tokens)
        rows = self.db.execute("""
        SELECT scene, content FROM mem_cells_fts
        WHERE mem_cells_fts MATCH ?
        LIMIT ?
        """, (fts_query, limit)).fetchall()
        if not rows:
            # Fallback: return the most salient cells when nothing matches
            rows = self.db.execute("""
            SELECT scene, content FROM mem_cells
            ORDER BY salience DESC
            LIMIT ?
            """, (limit,)).fetchall()
        return rows

    def retrieve_scene_summary(self, scene):
        row = self.get_scene(scene)
        return row["summary"] if row else ""
```

We focus on memory retrieval and scene maintenance. We implement safe full-text search by sanitizing user queries, and we add a fallback that returns the most salient cells when no lexical match is found. We also expose helper methods for fetching consolidated scene summaries, which the agent uses to build long-horizon context.
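The sanitization step is easier to see in isolation. The sketch below keeps only alphanumeric tokens, which drops punctuation and FTS5 syntax characters, and then OR-joins them. `build_fts_query` is a hypothetical name, and quoting each token is an extra precaution assumed here, since uppercase `AND`, `OR`, and `NOT` are operators in FTS5 query syntax.

```python
import re

def build_fts_query(text: str) -> str:
    """Turn free text into an OR query that is safe to pass to FTS5 MATCH."""
    tokens = re.findall(r"[a-zA-Z0-9]+", text)
    # Double quotes make each token a plain string, so AND/OR/NOT lose their operator meaning
    return " OR ".join(f'"{t}"' for t in tokens)

print(build_fts_query("budget AND timeline?"))  # "budget" OR "AND" OR "timeline"
print(repr(build_fts_query("?!")))              # '' (caller should skip the search)
```

An empty result signals that the query contained nothing searchable, which is why the retrieval method returns early in that case instead of running `MATCH` on an empty string.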

```python
class MemoryManager:
    def __init__(self, db: MemoryDB):
        self.db = db

    def extract_cells(self, user, assistant) -> List[Dict]:
        prompt = f"""
Convert this interaction into structured memory cells.
Return JSON array with objects containing:
- scene
- cell_type (fact, plan, preference, decision, task, risk)
- salience (0-1)
- content (compressed, factual)

User: {user}
Assistant: {assistant}
"""
        raw = llm(prompt)
        # Strip markdown code fences the model may wrap around the JSON
        raw = re.sub(r"```json|```", "", raw)
        try:
            cells = json.loads(raw)
            return cells if isinstance(cells, list) else []
        except Exception:
            return []

    def consolidate_scene(self, scene):
        rows = self.db.db.execute(
            "SELECT content FROM mem_cells WHERE scene=? ORDER BY salience DESC",
            (scene,)
        ).fetchall()
        if not rows:
            return
        cells = [json.loads(r["content"]) for r in rows]
        prompt = f"""
Summarize this memory scene in under 100 words.
Keep it stable and reusable for future reasoning.

Cells:
{cells}
"""
        summary = llm(prompt, temperature=0.05)
        self.db.upsert_scene(scene, summary)

    def update(self, user, assistant):
        cells = self.extract_cells(user, assistant)
        for cell in cells:
            self.db.insert_cell(cell)
        # Re-summarize only the scenes touched by this interaction
        for scene in set(c["scene"] for c in cells):
            self.consolidate_scene(scene)
```

We implement a dedicated memory management component responsible for structuring experience. We extract compact memory cells from each interaction, store them, and then consolidate every affected scene into a stable summary. This keeps memory evolving incrementally while the agent's response logic stays unchanged.
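Model output is not guaranteed to match the requested schema, and a cell missing `scene` or `salience` would raise a `KeyError` during insertion. The following sketch shows one possible validation pass between parsing and storage. `parse_cells`, `FENCE`, and `REQUIRED` are hypothetical names, and the clamping rule is an assumption based on the 0-1 range the prompt asks for.

```python
import json

FENCE = "`" * 3  # markdown code-fence marker, built indirectly to keep this block readable
REQUIRED = {"scene", "cell_type", "salience", "content"}

def parse_cells(raw: str) -> list:
    """Parse model output into cells, dropping anything malformed (hypothetical helper)."""
    text = raw.replace(FENCE + "json", "").replace(FENCE, "").strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    cells = []
    for item in data:
        # Skip entries that are not objects or lack a required field
        if not isinstance(item, dict) or not REQUIRED.issubset(item):
            continue
        try:
            salience = float(item["salience"])
        except (TypeError, ValueError):
            continue
        # Clamp salience into the 0-1 range requested by the prompt
        cells.append({**item, "salience": min(1.0, max(0.0, salience))})
    return cells

sample = (
    FENCE + "json\n"
    '[{"scene": "memory", "cell_type": "fact", "salience": 1.4, "content": "Uses SQLite"},'
    ' {"scene": "broken"}]\n' + FENCE
)
print(parse_cells(sample))
```

Here the second object is dropped because it lacks three required fields, and the out-of-range salience of the first is clamped to 1.0.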

```python
class WorkerAgent:
    def __init__(self, db: MemoryDB, mem_manager: MemoryManager):
        self.db = db
        self.mem_manager = mem_manager

    def answer(self, user_input):
        # Find the scenes that match the user's message
        recalled = self.db.retrieve_scene_context(user_input)
        scenes = set(r["scene"] for r in recalled)
        # One "[scene]" header followed by its summary, separated by newlines
        summaries = "\n".join(
            f"[{scene}]\n{self.db.retrieve_scene_summary(scene)}"
            for scene in scenes
        )
        prompt = f"""
You are an intelligent agent with long-term memory.

Relevant memory:
{summaries}

User: {user_input}
"""
        assistant_reply = llm(prompt)
        # Feed the finished turn back into memory
        self.mem_manager.update(user_input, assistant_reply)
        return assistant_reply

db = MemoryDB()
memory_manager = MemoryManager(db)
agent = WorkerAgent(db, memory_manager)

print(agent.answer("We are building an agent that remembers projects long term."))
print(agent.answer("It should organize conversations into topics automatically."))
print(agent.answer("This memory system should support future reasoning."))

# Inspect the consolidated scene summaries
for row in db.db.execute("SELECT * FROM mem_scenes"):
    print(dict(row))
```

We define a worker agent that performs reasoning while remaining memory-aware. We retrieve relevant scenes, assemble their summaries into context, and generate responses grounded in long-term knowledge. We then close the loop by passing the interaction back to the memory manager, so the system keeps improving over time.
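The prompt assembly step can be illustrated without a model call. In the sketch below, `build_prompt` is a hypothetical helper that takes a plain dictionary of scene summaries in place of the database lookups. Sorting the scene names is an assumption added for reproducibility, since iterating over a set of strings has no guaranteed order.

```python
def build_prompt(summaries: dict, user_input: str) -> str:
    """Assemble the agent prompt from scene summaries (hypothetical helper)."""
    # Sorting scene names keeps the prompt stable across runs
    memory = "\n".join(f"[{scene}]\n{summaries[scene]}" for scene in sorted(summaries))
    return (
        "You are an intelligent agent with long-term memory.\n"
        f"Relevant memory:\n{memory}\n"
        f"User: {user_input}\n"
    )

prompt = build_prompt(
    {"storage": "Cells live in SQLite.", "goals": "Remember projects long term."},
    "Where are cells stored?",
)
print(prompt)
```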

In this tutorial, we showed how an agent can organize its own memory and turn past interactions into stable, reusable knowledge instead of ephemeral conversation logs. We let memory evolve through consolidation and selective recall, which supports consistent and grounded reasoning over time. This approach provides a practical foundation for building long-lived agent systems, and it can be extended with forgetting mechanisms, richer relational memory, or graph-based orchestration as the system grows in complexity.
