Kyutai Releases Hibiki-Zero: A3B Parameter for Simultaneous Speech-to-Speech Translation Using GRPO Reinforcement Learning Without Any Word-Level Aligned Data

Kyutai released Hibiki-Zeroa new model for simultaneous speech-to-speech translation (S2ST) and speech-to-text translation (S2TT). The system translates the source speech into the target language in real time. It handles non-monotonic term dependencies in the process. Unlike previous models, Hibiki-Zero does not require word-level aligned data for training. This eliminates a major bottleneck in scaling AI translations into multiple languages.

Traditional methods rely on supervised training and word-level alignment. This alignment is difficult to collect at scale. Developers often rely on artificial alignment and language-specific heuristics. Hibiki-Zero overcomes this difficulty by using a novel reinforcement learning (RL) strategy to optimize latency.

Multistream Architecture

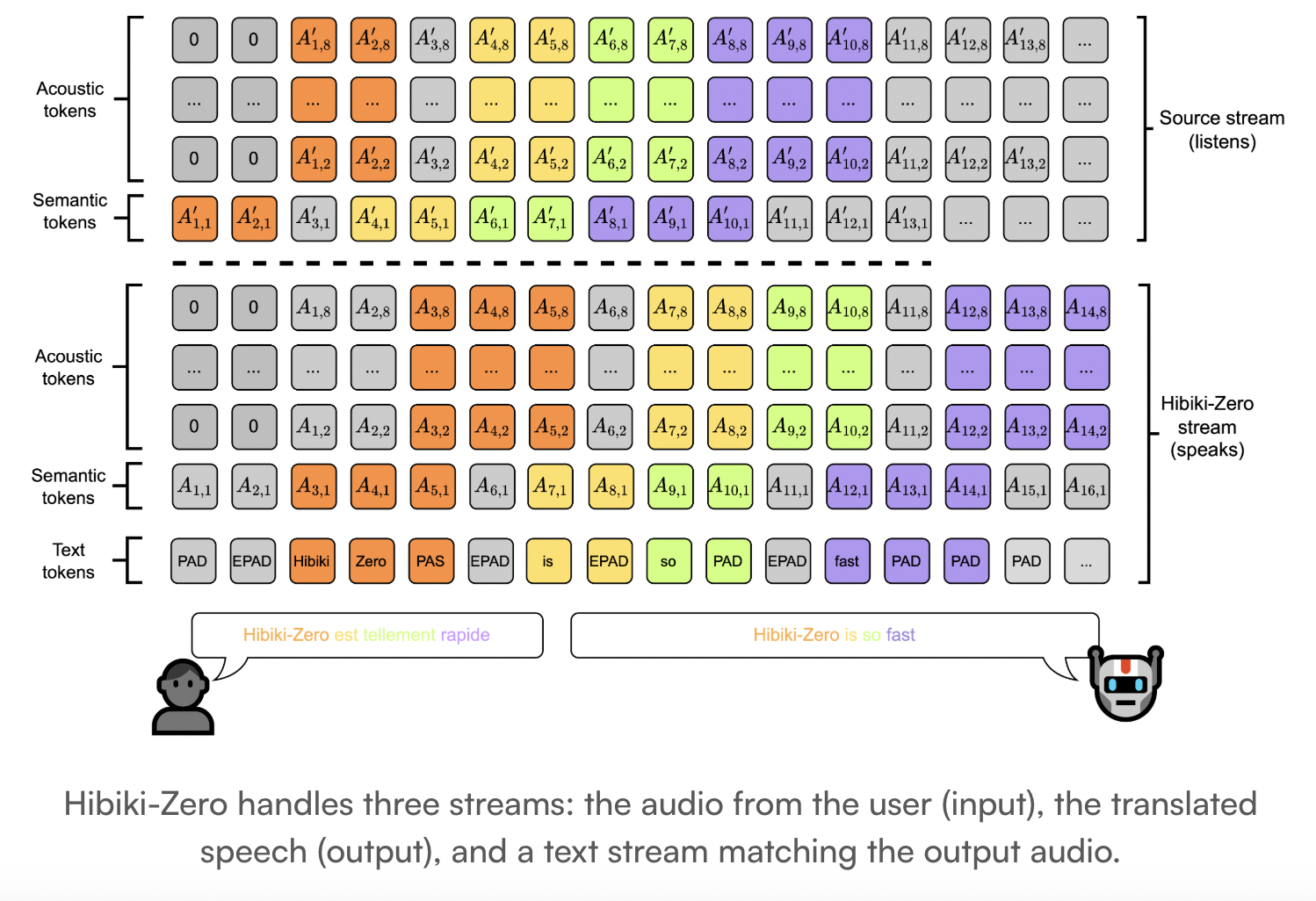

Hibiki-Zero is a decoder model only. It uses a multistream architecture to model a sequence of shared tokens. The model holds 3 direct streams:

- Source stream: Audio tokens from the input speech.

- Targeted streaming: Made sound tokens in translated speech.

- Inner Monologue: A stream of concatenated text tokens that match the target audio.

The system uses the Me neural audio codec. Mimi is a cause and effect codec that combines waveforms into separate tokens. It works with a framerate of 12.5 Hz. The model uses the RQ-Transformer to model this sound stream.

Architectural details include:

- Absolute Parameters: 3b.

- Temporary Transformer: 28 layers with 2048 hidden dimensions.

- Depth Transformer: 6 layers per codebook with 1024 hidden dimensions.

- Content Window: 4 min.

- Audio Codebooks: 16 levels of high quality speech.

Training Without Human Translation Data

Hibiki-Zero is trained in 2 main stages:

- Intensive Training Training: The model is first trained on sentence-level aligned data. This data confirms that ith the sentence in the guide is the translation of the word ith sentence in the source. The research team uses an artificial silencing technique in the target speech to delay its content relative to the source.

- Reinforcement Learning (RL): The model is used Group Relative Policy Optimization (GRPO) refining its policy. This section reduces translation delays while maintaining quality.

The RL process uses consider the rewards based only BLEU score. Calculates average rewards for multiple locations during translation. The hyperparameter ⍺ balances the trade-off between speed and accuracy. A lower ⍺ reduces latency but may slightly degrade quality.

It reaches Italian at the time of recording

The researchers showed how easily Hibiki-Zero adapts to new languages. They added Italian as an input language using less than 1000h of speech data.

- They carry out supervised repairs that follow the GRPO process.

- The model has reached a trade-off of quality and latency similar to the Meta It’s seamless model.

- It has surpassed Seamless with speaker uniformity in finish 30 points.

Performance and Results

Hibiki-Zero achieves excellent results in all 5 X-to-English tasks. Tested on Sound-NTREX-4L long form benchmark, covering 15h of speech per TTS program.

| Metric | Hibiki-Zero (French) | Seamless (French) |

| ASR-BLEU (↑) | 28.7 | 23.9 |

| Speaker compatibility (↑) | 61.3 | 44.4 |

| Average Lag (LAAL) (↓) | 2.3 | 6.2 |

In short form tasks (Europarl-ST), Hibiki-Zero reached ASR-BLEU 34.6 with a lag of 2.8 seconds. Human ratings also found a higher model than the natural bases of speech and voice transmission.

Key Takeaways

- Zero Aligned Data Requirement: Hibiki-Zero eliminates the need for expensive, hand-built word-level alignment between source and target speech, which was previously a major barrier to simulating simultaneous translation into new languages.

- GRPO-Driven Latency Optimization: The model uses Group Relative Policy Optimization (GRPO) and a simple reward system based only on BLEU scores to automatically learn an efficient translation policy, measuring high translation quality and low latency.

- A Coarse-to-Fine Training Strategy: The training pipeline starts with aligned sentence-level data to train the model’s base interpretation during high latency, followed by a reinforcement learning phase that “teaches” the model when to speak and when to listen.

- The Supreme Voice and Nature: In benchmarking against previous high-end systems like Seamless, Hibiki-Zero achieved a 30-point lead in speaker uniformity and the highest scores in speech environment and sound quality across five language functions.

- Acquiring a New Language Fast: The architecture is very portable; researchers have shown that Hibiki-Zero can be adapted to a new input language (Italian) with less than 1,000 hours of speech data while maintaining its original performance in other languages.

Check it out Paper, technical information, Repo and samples. Also, feel free to follow us Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to Our newspaper. Wait! are you on telegram? now you can join us on telegram too.