Meet OAT: A New Action Tokenizer Bringing LLM-Style Scaling and Flexible, Anytime Inference to the Robotics World

Robots are entering their GPT-3 era. For years, researchers have tried to train robots with the same autoregressive (AR) models that power large language models (LLMs): if a model can predict the next word in a sentence, it should be able to predict the next movement of a robot arm. However, a technical wall has blocked this progress: continuous robot movements are difficult to turn into discrete tokens.

A team of researchers from Harvard University and Stanford University has released a new framework called Ordered Action Tokenization (OAT) to bridge this gap.

The Messy Reality of Robot Actions

Tokenization converts complex data into a sequence of discrete numbers (tokens). For robots, actions are continuous signals such as joint angles, so they must be discretized before an AR model can predict them. Previous strategies have had fatal flaws:

- Binning: Discretizes every action dimension at every timestep into a fixed ‘bin.’ Although simple, it produces very long token sequences that make training and inference slow.

- FAST (Frequency-space Action Sequence Tokenization): Uses mathematics to compress motion into frequency coefficients. It is fast, but it often produces ‘undecodable’ sequences in which small prediction errors cause the robot to halt or move unpredictably.

- Learned Latent Tokenizers: These use a learned ‘dictionary’ (codebook) of movements. They decode safely but impose no particular order on the tokens, so the model treats early and late tokens as equally important.
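To make binning’s length problem concrete, here is a minimal sketch of per-dimension uniform binning. The 256-bin vocabulary and the [-1, 1] action range are assumptions for illustration, not details from the article; the point is that the token count always equals timesteps x action dimensions.

```python
from typing import List


def bin_tokenize(chunk: List[List[float]], num_bins: int = 256,
                 low: float = -1.0, high: float = 1.0) -> List[int]:
    """Uniformly bin every value of an H x D action chunk: one token per value."""
    width = (high - low) / num_bins
    tokens: List[int] = []
    for step in chunk:                 # H timesteps
        for value in step:             # D action dimensions
            clipped = min(max(value, low), high)
            index = int((clipped - low) / width)
            tokens.append(min(index, num_bins - 1))  # value == high goes in the top bin
    return tokens


def bin_detokenize(tokens: List[int], dim: int, num_bins: int = 256,
                   low: float = -1.0, high: float = 1.0) -> List[List[float]]:
    """Map each token to its bin center and regroup into rows of `dim` values."""
    width = (high - low) / num_bins
    values = [low + (t + 0.5) * width for t in tokens]
    return [values[i:i + dim] for i in range(0, len(values), dim)]


# A hypothetical 32-step chunk of 7-D actions (all zeros for simplicity).
chunk = [[0.0] * 7 for _ in range(32)]
tokens = bin_tokenize(chunk)
print(len(tokens))  # 224
```

Every value becomes its own token, so even this modest hypothetical chunk costs 32 x 7 = 224 tokens. Decoding is trivially safe, but the AR model must generate all of them before the robot can act.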

The Three Golden Rules of OAT

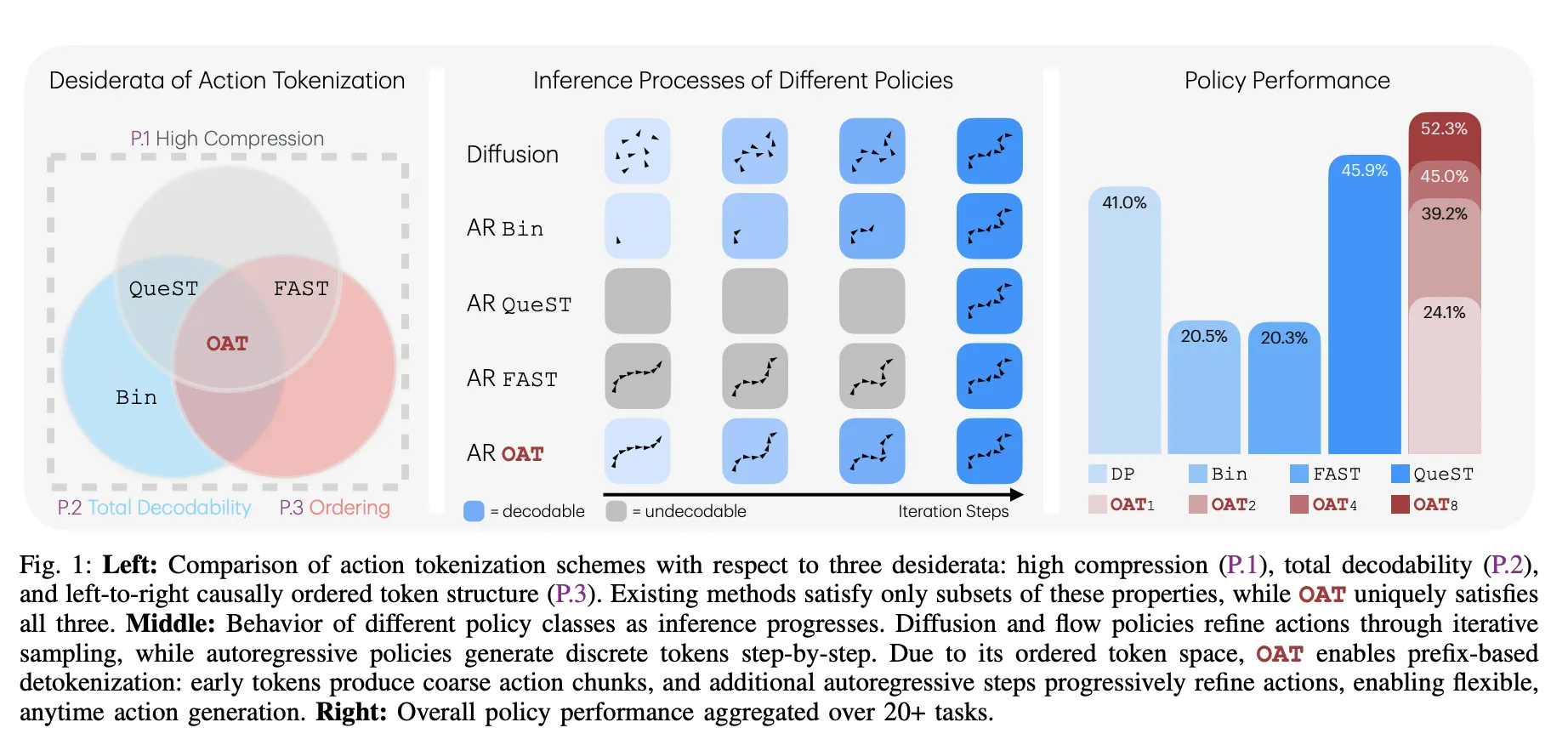

The research team identified three key properties (desiderata) for a functional robot action tokenizer:

- High Compression (P.1): Token sequences should be short to keep models efficient.

- Total Decodability (P.2): The decoder must be a total function, so that every possible token sequence maps to a valid action chunk.

- Causal Ordering (P.3): Tokens should have a left-to-right structure in which early tokens capture global motion and later tokens refine the details.
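What ‘total’ and ‘ordered’ mean for a decoder can be illustrated with a toy. The sketch below is not OAT’s learned decoder: it is a hand-built, hypothetical coarse-to-fine decoder (names such as `toy_decode`, `VOCAB` and `LIMIT` are illustrative) that maps any token sequence of length 0 to 3 to an action chunk and clips the result to joint limits. An exhaustive loop then confirms the totality property (P.2) on this small token space, and the shrinking `0.5 ** k` scale mimics the coarse-to-fine ordering of P.3.

```python
import itertools
import math
from typing import List, Sequence

H, D = 4, 2        # toy chunk shape: 4 timesteps x 2 action dims (hypothetical)
VOCAB = 3          # toy codebook size per token position (hypothetical)
MAX_TOKENS = 3     # longest token sequence the toy decoder accepts
LIMIT = 1.0        # symmetric joint limit that defines a "valid" action


def toy_decode(tokens: Sequence[int]) -> List[List[float]]:
    """Toy coarse-to-fine decoder; NOT OAT's learned transformer decoder.

    Token k selects a level in {-1, 0, +1}, scaled by 0.5**k and applied to a
    cosine pattern of frequency k + 1, so early tokens set the overall shape
    and later tokens add progressively smaller corrections.
    """
    if len(tokens) > MAX_TOKENS or any(not 0 <= t < VOCAB for t in tokens):
        raise ValueError("token sequence outside the toy token space")
    chunk = [[0.0] * D for _ in range(H)]
    for k, tok in enumerate(tokens):
        level = tok - 1
        for t in range(H):
            delta = level * 0.5 ** k * math.cos(math.pi * (k + 1) * t / H)
            for d in range(D):
                chunk[t][d] += delta
    # Clipping to the joint limits keeps every decoded value valid.
    return [[min(max(x, -LIMIT), LIMIT) for x in step] for step in chunk]


def is_valid(chunk: List[List[float]]) -> bool:
    """A chunk is valid if it has shape (H, D) and finite values within limits."""
    return (len(chunk) == H
            and all(len(step) == D for step in chunk)
            and all(math.isfinite(x) and abs(x) <= LIMIT
                    for step in chunk for x in step))


# Totality check: decode every sequence of every length 0..MAX_TOKENS.
checked = 0
for length in range(MAX_TOKENS + 1):
    for seq in itertools.product(range(VOCAB), repeat=length):
        assert is_valid(toy_decode(seq)), seq
        checked += 1
print(f"all {checked} token sequences decode to valid chunks")
```

In a learned tokenizer the token space is far too large to enumerate, so totality has to come from the decoder’s construction (every token id is a legal input and every output is a well-formed chunk) rather than from testing.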

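The Secret Sauce: Nested Dropout and Registers

OAT uses a transformer encoder with register tokens to summarize each action chunk. To force the model to learn the ‘important’ things first, the research team used a technique called Nested Dropout: during training, only a random-length prefix of the tokens is kept and the rest are dropped, so the decoder must reconstruct the action from the early tokens alone.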
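The core mechanism of nested dropout fits in a few lines. This is a minimal sketch, assuming the kept-prefix length is drawn uniformly and dropped positions are replaced by a placeholder `MASK` id; the article does not say which distribution or masking scheme OAT uses. In a tokenizer, the truncated sequence would then presumably be decoded and compared with the original chunk.

```python
import random
from typing import List

MASK = -1  # hypothetical placeholder id for dropped positions


def nested_dropout(tokens: List[int], rng: random.Random,
                   min_keep: int = 1) -> List[int]:
    """Keep a random-length prefix of `tokens` and mask the rest.

    Only tails are ever dropped, so across training steps the decoder must
    reconstruct the chunk from the first k tokens for every k, pushing the
    most important information into the earliest positions.
    """
    k = rng.randint(min_keep, len(tokens))  # uniform over [min_keep, n]: an assumption
    return tokens[:k] + [MASK] * (len(tokens) - k)


# Usage: eight arbitrary token ids from one hypothetical action chunk.
rng = random.Random(0)
chunk_tokens = [11, 42, 7, 3, 19, 5, 8, 23]
for _ in range(3):
    print(nested_dropout(chunk_tokens, rng))
```

Because the cut point changes on every training step, every prefix length gets trained, which is what later makes prefix-based decoding possible.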

Breaking the Benchmarks

The research team tested OAT on more than 20 tasks across 4 major simulation benchmarks. OAT consistently outperformed the industry-standard Diffusion Policy (DP) as well as previous tokenizers.

Performance Results

| Benchmark | OAT Success Rate | DP Success Rate | Bin Token Count | OAT Token Count |
|---|---|---|---|---|
| LIBERO | 56.3% | 36.6% | 224 | 8 |
| RoboMimic | 73.1% | 67.1% | 224 | 8 |
| MetaWorld | 24.4% | 19.3% | 128 | 8 |
| RoboCasa | 54.6% | 54.0% | 384 | 8 |
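A quick calculation over the table’s own numbers shows the two headline effects: how many fewer tokens OAT needs than binning, and the success-rate gap to DP in percentage points. Nothing here goes beyond the reported figures.

```python
# Figures copied from the table: (OAT %, DP %, bin tokens, OAT tokens)
RESULTS = {
    "LIBERO": (56.3, 36.6, 224, 8),
    "RoboMimic": (73.1, 67.1, 224, 8),
    "MetaWorld": (24.4, 19.3, 128, 8),
    "RoboCasa": (54.6, 54.0, 384, 8),
}


def summarize(results):
    """Per benchmark: (token compression vs. binning, OAT minus DP in points)."""
    return {
        name: (bins // oat_tokens, round(oat - dp, 1))
        for name, (oat, dp, bins, oat_tokens) in results.items()
    }


for name, (ratio, gain) in summarize(RESULTS).items():
    print(f"{name}: {ratio}x fewer tokens than binning, +{gain} pts over DP")
```

On these figures OAT uses 16x to 48x fewer tokens than binning, while its margin over DP ranges from 19.7 points on LIBERO to 0.6 points on RoboCasa.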

‘Anytime’ Inference: Speed vs. Precision

OAT’s biggest advantage is prefix-based detokenization. Because the tokens are ordered by importance, you can stop generation early and decode whatever prefix has been produced.

- Coarse Actions: Decoding just 1 or 2 tokens quickly gives the robot a rough motion, which is useful for low-latency tasks.

- Fine Actions: Generating all 8 tokens provides the high-precision detail required for complex insertions.

This allows a smooth trade-off between computational cost and action fidelity that fixed-length tokenizers could not provide.
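Prefix-based decoding under a latency budget can be sketched as a loop that keeps sampling tokens until time runs out and then detokenizes whatever prefix exists. `anytime_decode`, its budget logic, and the stub policy and detokenizer below are hypothetical stand-ins, not OAT’s released API; the injectable `clock` parameter only makes the loop easy to test.

```python
import time
from typing import Callable, List, Sequence, Tuple

Chunk = List[List[float]]


def anytime_decode(next_token: Callable[[Sequence[int]], int],
                   detokenize: Callable[[Sequence[int]], Chunk],
                   budget_s: float,
                   max_tokens: int = 8,
                   min_tokens: int = 1,
                   clock: Callable[[], float] = time.perf_counter,
                   ) -> Tuple[List[int], Chunk]:
    """Sample tokens until `budget_s` elapses or `max_tokens` is reached,
    then decode the prefix produced so far (hypothetical helper)."""
    start = clock()
    tokens: List[int] = []
    while len(tokens) < max_tokens:
        # Always produce at least `min_tokens`; afterwards, stop once over budget.
        if len(tokens) >= min_tokens and clock() - start >= budget_s:
            break
        tokens.append(next_token(tokens))
    return tokens, detokenize(tokens)


# Hypothetical stand-ins for a trained AR policy and an ordered detokenizer.
def stub_next_token(prefix: Sequence[int]) -> int:
    return len(prefix)  # pretend the policy predicts its position index


def stub_detokenize(tokens: Sequence[int]) -> Chunk:
    return [[0.0] * 7 for _ in range(32)]  # fixed-shape zero chunk


tokens, chunk = anytime_decode(stub_next_token, stub_detokenize, budget_s=0.005)
print(f"{len(tokens)} tokens -> {len(chunk)} x {len(chunk[0])} action chunk")
```

The `min_tokens` floor guarantees at least a coarse action even when the budget is already exhausted, which only works because the detokenizer accepts any prefix.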

Key Takeaways

- Solving the Tokenization Gap: OAT addresses a fundamental limitation in applying autoregressive models to robotics by introducing a learned tokenizer that simultaneously achieves high compression, total decodability, and causal ordering.

- Ordered Representation via Nested Dropout: By applying nested dropout during training, OAT forces the model to place global, coarse-grained motion patterns in early tokens while reserving later tokens for finer refinement.

- Total Decodability and Reliability: Unlike earlier frequency-domain methods such as FAST, OAT’s detokenizer is a total function, so every possible token sequence decodes to a valid action chunk, preventing runtime failures.

- Flexible ‘Anytime’ Inference: The ordered structure enables prefix-based decoding, allowing robots to execute coarse actions from just one or two tokens to save computation, or to use the full sequence of eight tokens for high-precision tasks.

- Top Performance Across Benchmarks: Autoregressive policies equipped with OAT consistently outperform diffusion-based policies and other tokenization schemes, achieving an aggregate success rate of 52.3% and superior results in the real-world ‘Pick & Place’ and ‘Stack Cups’ tasks.

Check out the Paper, Repo and Project Page.

Michal Sutter is a data science expert with a Master of Science in Data Science from the University of Padova. With a strong foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex data sets into actionable insights.