Performance testing of Google’s new AI

Just 3 months after releasing Gemini 3 Pro, Google DeepMind is back with its latest iteration: Gemini 3.1 Pro.

A major upgrade in terms of capabilities and safety, the Gemini 3.1 Pro model strives to be accessible and usable by all. Regardless of your preference, platform, or purchasing power, the model has a lot to offer.

I will be exploring the capabilities of Gemini 3.1 Pro and elaborating on its key features. From how to access the model to its benchmarks, everything about it is covered in this article.

Gemini 3.1 Pro: What’s new?

Gemini 3.1 Pro is the latest member of the Gemini model family. As always, the model comes with a long list of features and improvements over its predecessors. Some of the most notable are:

- 1 Million Context Window: It maintains an industry-leading input capacity of 1 million tokens, allowing it to process more than 1,500 pages of text or entire blocks of code in one go.

- Advanced Reasoning: It delivers more than double the reasoning performance of Gemini 3 Pro, scoring 77.1% on the ARC-AGI-2 benchmark.

- Improved Agentic Reliability: Specifically optimized for agentic workflows, including a dedicated API endpoint (gemini-3.1-pro-preview-customtools) for precise tool orchestration and bash execution.

- Price: The cost per token of the latest model is the same as its predecessor's. Anyone already using the Pro variant gets a free upgrade.

- Advanced Vibe Coding: The model handles visual coding very well. It can generate website-ready animated SVGs built purely in code, which means crisp scaling and small file sizes.

- Hallucinations: Gemini 3.1 Pro directly tackles the hallucination problem, cutting its hallucination rate from 88% to 50% on the AA-Omniscience: Knowledge and Hallucination Benchmark.

- Granular Thinking: The model adds more granularity to the thinking level option offered by its predecessor. Users can now choose between high, medium, and low thinking levels.

| Thinking level | Gemini 3.1 Pro | Gemini 3 Pro | Gemini 3 Flash | Description |
| --- | --- | --- | --- | --- |
| Minimal | Not supported | Not supported | Supported | Matches the no-thinking setting for most queries. The model may still think minimally on complex coding tasks. Minimizes latency for chat or high-throughput applications. |
| Low | Supported | Supported | Supported | Minimizes latency and cost. Best for simple instruction following or high-throughput applications. |
| Medium | Supported | Not supported | Supported | Balanced thinking for most tasks. |
| High | Supported (Default, Dynamic) | Supported (Default, Dynamic) | Supported (Default, Dynamic) | Maximizes reasoning depth. It may increase latency, but the output is more carefully reasoned. |
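The support matrix above can be captured in a small helper that refuses a thinking level a given model does not offer. This is only a sketch: the level names (`minimal`, `low`, `medium`, `high`), the function name `build_request`, and the request body layout (modelled on the shape of a Gemini REST `generateContent` payload) are assumptions for illustration, not something confirmed in this article. Nothing here calls the API.

```python
# Support matrix transcribed from the table above.
# Level names are an assumption ("minimal", "low", "medium", "high").
SUPPORTED_LEVELS = {
    "Gemini 3.1 Pro": {"low", "medium", "high"},
    "Gemini 3 Pro": {"low", "high"},
    "Gemini 3 Flash": {"minimal", "low", "medium", "high"},
}

# The table lists the highest level as the default for all three models.
DEFAULT_LEVEL = "high"


def build_request(model_name: str, prompt: str, thinking_level: str = DEFAULT_LEVEL) -> dict:
    """Build a request body, rejecting levels the model does not support."""
    levels = SUPPORTED_LEVELS.get(model_name)
    if levels is None:
        raise KeyError(f"Unknown model: {model_name!r}")
    if thinking_level not in levels:
        raise ValueError(
            f"{model_name} does not support thinking level {thinking_level!r}; "
            f"choose one of {sorted(levels)}"
        )
    # Hypothetical payload layout; check the official API reference before use.
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingLevel": thinking_level}},
    }


if __name__ == "__main__":
    body = build_request("Gemini 3.1 Pro", "Summarize this report.", "medium")
    print(body["generationConfig"])
```

Validating the level before sending a request keeps a typo or an unsupported setting from turning into a confusing server-side error.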

Hands-On: Let’s have some fun

All the talk in the world would be for naught if the performance falls short of the promise. To test Gemini 3.1 Pro properly, I tried it on three kinds of tasks:

- Complex reasoning

- Coding and debugging

- Long-context synthesis

Task 1: Multi-Step Logical Reasoning

What it tests: Chained reasoning, constraint handling, and hallucination resistance.

Prompt:

“You are given the following situation:

Five analysts – A, B, C, D, and E – are assigned to three projects: Alpha, Beta, and Gamma.

Rules:

1. Each project must have at least one analyst.

2. A cannot work with C.

3. B must be assigned to the same project as D.

4. E cannot be in Alpha.

5. No project can have more than three analysts.

Question: List all valid combinations of assignments. Show your reasoning clearly and make sure no rules are broken.

“

Answer:

Gemini 3.1 Pro handled the constraint-heavy logic without getting tangled in contradictions, which is where most models stumble. Its consistency and clarity in enumerating the valid combinations showed real reasoning depth.
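For readers who want to check a model's answer to this prompt, here is a minimal brute-force sketch. It assumes each analyst is assigned to exactly one project and reads rule 2 as "A and C are never on the same project". The function names are illustrative and were not part of the original test.

```python
from itertools import product

ANALYSTS = ("A", "B", "C", "D", "E")
PROJECTS = ("Alpha", "Beta", "Gamma")


def is_valid(assignment: dict) -> bool:
    """Check one analyst -> project mapping against the five rules."""
    counts = {project: 0 for project in PROJECTS}
    for project in assignment.values():
        counts[project] += 1
    return (
        all(1 <= n <= 3 for n in counts.values())   # rules 1 and 5
        and assignment["A"] != assignment["C"]      # rule 2
        and assignment["B"] == assignment["D"]      # rule 3
        and assignment["E"] != "Alpha"              # rule 4
    )


def valid_assignments() -> list:
    """Enumerate all 3**5 mappings and keep the ones that pass every rule."""
    results = []
    for combo in product(PROJECTS, repeat=len(ANALYSTS)):
        assignment = dict(zip(ANALYSTS, combo))
        if is_valid(assignment):
            results.append(assignment)
    return results


if __name__ == "__main__":
    solutions = valid_assignments()
    print(len(solutions))
    for solution in solutions:
        print(solution)
```

Under these assumptions the search finds 20 valid assignments. Rule 5 never excludes anything on its own: putting four analysts on one project would leave only one analyst for the other two projects, which already breaks rule 1.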

Task 2: Code Generation & Refactoring

What it tests: Structured thinking, edge-case awareness, code correctness, and production realism.

Prompt:

“You are given the following Python function:

    def process_orders(orders):
        total = 0
        for order in orders:
            if order["status"] == "completed":
                total += order["amount"]
        return total

Refactor this function to:

1. Handle missing keys safely.

2. Ignore negative amounts.

3. Log skipped invalid entries.

4. Be production-ready, with type hints and docstrings.

5. Include unit tests for edge cases.

Return only clean, well-structured Python code.

“

Answer:

    import logging
    import unittest
    from typing import List, Dict, Any

    logger = logging.getLogger(__name__)


    def process_orders(orders: List[Dict[str, Any]]) -> float:
        """
        Process a list of order dictionaries and calculate
        the total amount of completed orders.
        """
        total: float = 0.0

        if not isinstance(orders, list):
            logger.error("'orders' must be a list.")
            return total

        for index, order in enumerate(orders):
            if not isinstance(order, dict):
                logger.warning("Skipping index %d: Not a dictionary.", index)
                continue

            if order.get("status") != "completed":
                continue

            if "amount" not in order:
                logger.warning("Skipping index %d: Missing 'amount'.", index)
                continue

            amount = order["amount"]

            if not isinstance(amount, (int, float)) or isinstance(amount, bool):
                logger.warning("Skipping index %d: 'amount' must be numeric.", index)
                continue

            if amount < 0:
                logger.warning("Skipping index %d: Negative amount (%.2f).", index, amount)
                continue

            total += float(amount)

        return total


    class TestProcessOrders(unittest.TestCase):
        def setUp(self):
            logging.disable(logging.WARNING)

        def tearDown(self):
            logging.disable(logging.NOTSET)

        def test_valid_completed_orders(self):
            orders = [
                {"status": "completed", "amount": 100},
                {"status": "completed", "amount": 50.5}
            ]
            self.assertEqual(process_orders(orders), 150.5)

        def test_ignore_pending_orders(self):
            orders = [
                {"status": "pending", "amount": 100},
                {"status": "completed", "amount": 50}
            ]
            self.assertEqual(process_orders(orders), 50.0)

        def test_missing_keys_skipped(self):
            orders = [
                {"amount": 100},
                {"status": "completed"},
                {"status": "completed", "amount": 20}
            ]
            self.assertEqual(process_orders(orders), 20.0)

        # The output is cut off partway through this test:
        # def test_negative_amounts_ignored(self):
        #     orders = [
        #         {"status": "completed", "amount": -10},
        #         {"status": "completed", "amount": 3...

The refactored code felt production-ready, not toy-level. It anticipated edge cases, enforced type safety, and included meaningful tests. This is the kind of output that respects real-world development standards.

Task 3: Analytical Synthesis of Long Content

What it tests: Information compression, structured summarization, and reasoning over context.

Prompt:

“Below is an excerpt from a business report:

Company: NovaGrid AI

2022 Revenue: $12M

2023 Revenue: $28M

2024 Revenue: $46M

Customer churn increased from 4% to 11% in 2024.

R&D spending increased by 70% in 2024.

Operating margin dropped from 18% to 9%.

Enterprise customers grew by 40%.

SMB customers declined by 22%.

Cloud infrastructure costs doubled.

Tasks:

1. Identify the most likely causes of margin decline.

2. Identify strategic risks.

3. Recommend 3 actions based on the data.

4. Present your answer in a well-organized memo format.

“

Answer:

The model linked financial signals, operational shifts, and strategic risks into a coherent executive narrative. Its ability to pinpoint margin pressure while weighing growth signals shows strong business reasoning. It read like something a strategy consultant would write, not a generic summary.
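The margin pressure in this prompt can be checked with a few lines of arithmetic. The sketch below treats the three yearly figures as revenue in millions of dollars, and it assumes the 18% operating margin applies to 2023 and the 9% margin to 2024. The prompt does not state which years the margins refer to, so that pairing is an assumption, as are the helper names.

```python
# Yearly revenue in millions of USD, taken from the prompt.
REVENUE_MUSD = {2022: 12.0, 2023: 28.0, 2024: 46.0}

# Assumption: 18% is the 2023 operating margin and 9% is the 2024 one.
ASSUMED_MARGIN = {2023: 0.18, 2024: 0.09}


def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth as a fraction (0.5 means +50%)."""
    return (current - previous) / previous


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over the given number of years."""
    return (end / start) ** (1 / years) - 1


def operating_income(revenue: float, margin: float) -> float:
    """Operating income implied by a revenue figure and an operating margin."""
    return revenue * margin


growth_2023 = yoy_growth(REVENUE_MUSD[2022], REVENUE_MUSD[2023])
growth_2024 = yoy_growth(REVENUE_MUSD[2023], REVENUE_MUSD[2024])
two_year_cagr = cagr(REVENUE_MUSD[2022], REVENUE_MUSD[2024], 2)
income_2023 = operating_income(REVENUE_MUSD[2023], ASSUMED_MARGIN[2023])
income_2024 = operating_income(REVENUE_MUSD[2024], ASSUMED_MARGIN[2024])

if __name__ == "__main__":
    print(f"Revenue growth 2023: {growth_2023:.1%}")
    print(f"Revenue growth 2024: {growth_2024:.1%}")
    print(f"Two-year CAGR: {two_year_cagr:.1%}")
    print(f"Implied operating income 2023: ${income_2023:.2f}M")
    print(f"Implied operating income 2024: ${income_2024:.2f}M")
```

Under those assumptions, revenue growth slows from about 133% to about 64%, while implied operating income slips from roughly $5.0M to $4.1M despite the larger revenue base. That is the kind of tension a good answer to the memo task should surface.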

Note: I skipped the usual “build a dashboard” tasks because most recent models, such as Sonnet 4.6 and Kimi K2.5, can create one easily, so it would not pose much of a challenge to a model this capable.

How to access Gemini 3.1 Pro?

Unlike previous Pro models, Gemini 3.1 Pro is freely available to all users on the platform of their choice.

Now that you have decided to use Gemini 3.1 Pro, let’s see how to access it.

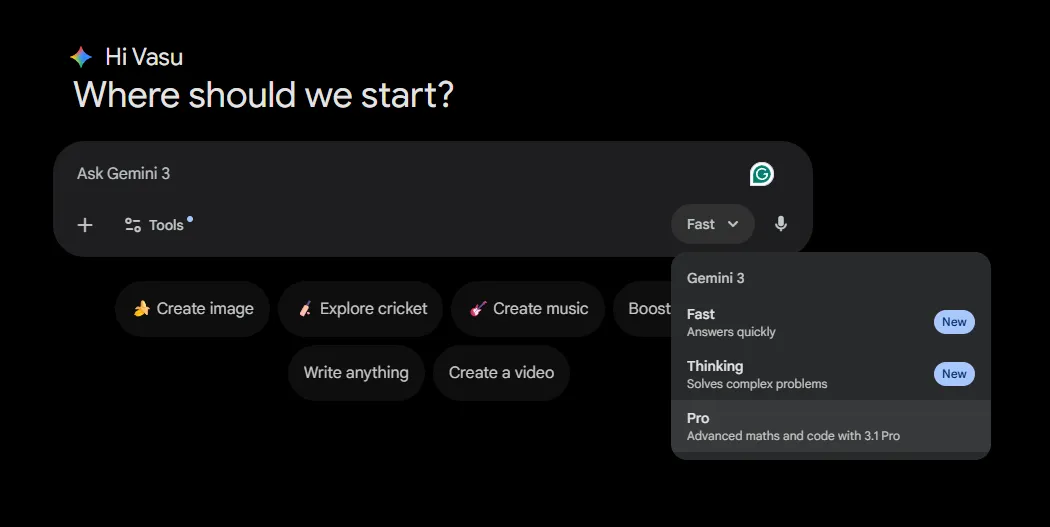

- Gemini Web UI: Free and Gemini Advanced users now have 3.1 Pro available in the model selector.

- API: Available through Google AI Studio for developers (models/Gemini-3.1-pro).

| Model | Base Input Tokens | 5m Cache Writes | 1h Cache Writes | Cache Hits & Refreshes | Output Tokens |
| --- | --- | --- | --- | --- | --- |
| Gemini 3.1 Pro (≤200K tokens) | $2 / 1M tokens | ~$0.20–$0.40 / 1M tokens | ~$4.50 / 1M tokens per hour of storage | Not officially documented | $12 / 1M tokens |
| Gemini 3.1 Pro (>200K tokens) | $4 / 1M tokens | ~$0.20–$0.40 / 1M tokens | ~$4.50 / 1M tokens per hour of storage | Not officially documented | $18 / 1M tokens |

- Cloud Platforms: Available through NotebookLM, Google Cloud’s Vertex AI, and Microsoft Foundry.
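The base input and output prices in the pricing table above can be turned into a rough per-request cost estimate. The sketch below assumes the price tier is chosen by the size of the input (up to 200K tokens versus more) and that the chosen tier applies to the whole request. It ignores the caching columns entirely. The function and constant names are illustrative.

```python
# Prices in USD per 1M tokens, copied from the pricing table above.
TIER_THRESHOLD_TOKENS = 200_000
PRICES_PER_MILLION = {
    "standard": {"input": 2.00, "output": 12.00},  # input of 200K tokens or fewer
    "long": {"input": 4.00, "output": 18.00},      # input above 200K tokens
}


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of one request, without any caching.

    Assumption: the tier is selected by input size and applies to both
    input and output tokens of that request.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("Token counts must be non-negative.")
    tier = "standard" if input_tokens <= TIER_THRESHOLD_TOKENS else "long"
    prices = PRICES_PER_MILLION[tier]
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


if __name__ == "__main__":
    print(f"${estimate_cost(100_000, 2_000):.3f}")  # short-context request
    print(f"${estimate_cost(300_000, 2_000):.3f}")  # long-context request
```

With these numbers, a 100K-token prompt with a 2K-token reply comes to about $0.22, while a 300K-token prompt with the same reply comes to about $1.24, so crossing the 200K threshold matters more than the raw token count suggests.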

Benchmarks

To gauge how good this model is, the benchmarks help.

There is a lot to unpack here. But the most striking improvement is certainly in abstract reasoning puzzles.

Let me put things in perspective: Gemini 3 Pro was released with an ARC-AGI-2 score of 31.1%. This was very high at the time and considered a breakthrough by LLM standards. Fast forward 3 months, and that score has been eclipsed by its successor, which more than doubles it at 77.1% (roughly 2.5 times higher).

This is the rapid pace at which AI models are improving.

If you are not familiar with what these benchmarks test, read this article: AI Benchmarks.

Conclusion: Powerful and Accessible

Gemini 3.1 Pro proves to be more than just a shiny multimodal model. Across reasoning, coding, and analytical synthesis, it shows real capability with production relevance. It is not flawless and still requires structured prompting and human oversight. But as a frontier model embedded in Google’s ecosystem, it is powerful, competitive, and worth serious evaluation.

Frequently Asked Questions

A. It is designed for advanced reasoning, long-context processing, multimodal understanding, and production-grade AI applications.

A. Developers can access it through Google AI Studio for prototyping or Vertex AI for scalable enterprise deployments.

A. It performs strongly but still requires structured prompting and human oversight to ensure accuracy and reduce hallucinations.
