Security lessons from AgentKit: Guardrails are not a get-out-of-jail-free card

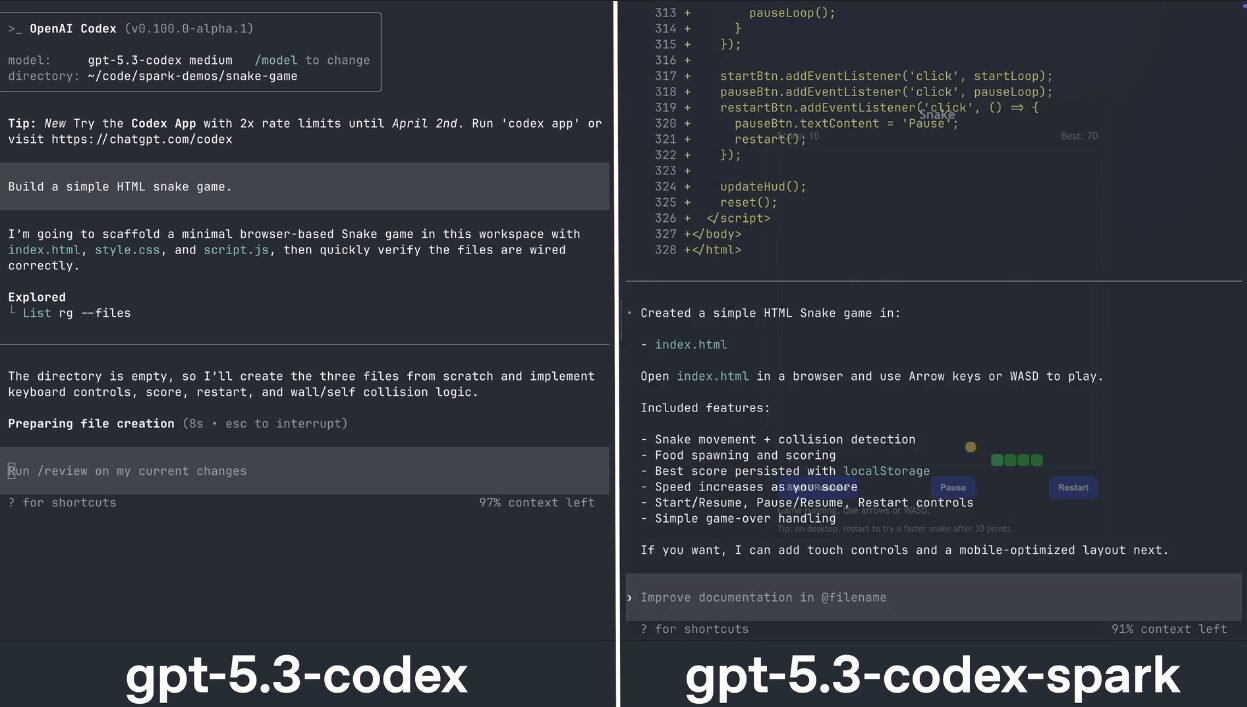

OpenAI’s AgentKit marks a major shift in how developers build agentic AI workflows. By packaging everything, from visual workflow design to connector management and frontend integration, into one place, it removes many of the obstacles that once complicated building an agent.

That accessibility is also what makes it dangerous. Developers can now connect powerful models to company data, third-party APIs, and production systems with just a few clicks. Guardrails are included to keep things safe, but they are far from foolproof. For enterprises adopting agentic AI at scale, guardrails alone are not a security strategy; they are only the first line of defense.

What AgentKit Guardrails Actually Do

AgentKit includes four built-in guardrails: PII, hallucination, moderation, and jailbreak. Each is designed to block unsafe behavior before it reaches or leaves the model.

- The PII guardrail looks for personally identifiable information (names, SSNs, email addresses, and so on) using pattern matching.

- The hallucination guardrail compares the model’s output against a trusted vector store and relies on another model to check whether the claims are actually supported.

- The moderation guardrail filters out content that is harmful or violates policy.

- The jailbreak guardrail uses an LLM-based classifier to detect prompt injection or prompt extraction attempts.

These mechanisms reflect thoughtful design, but each relies on assumptions that do not always hold in a real-world environment. The PII guardrail assumes that all sensitive data follows recognizable patterns, but small variations, such as lowercase names or encoded identifiers, can slip through.
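To make the pattern-matching limitation concrete, here is a minimal sketch of a regex-based SSN check. The pattern and the helper name `contains_ssn` are assumptions chosen for illustration; they are not AgentKit’s actual rules. The point is only that a pattern tuned to one format can miss the same value once it is reformatted or encoded.

```python
import base64
import re

# Hypothetical pattern for illustration; not AgentKit's actual rule set.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def contains_ssn(text: str) -> bool:
    """Return True if the text contains a dash-formatted SSN."""
    return SSN_PATTERN.search(text) is not None


plain = "My SSN is 123-45-6789."
# Same digits, but separated by spaces instead of dashes.
spaced = "My SSN is 123 45 6789."
# Same value, base64-encoded before being placed in the message.
encoded = "My SSN is " + base64.b64encode(b"123-45-6789").decode("ascii")

for label, sample in [("plain", plain), ("spaced", spaced), ("encoded", encoded)]:
    print(f"{label}: flagged={contains_ssn(sample)}")
```

Only the dash-formatted sample is flagged here. Adding more patterns narrows the gap but does not close it, because the set of possible encodings is open-ended.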

The hallucination guardrail is a soft guardrail, designed to detect when the model’s responses include unsupported claims. It works by comparing the model’s output against a trusted vector store, which can be configured through the OpenAI developer platform, and using a second model to determine whether the claims are “supported.” If confidence is high, the response passes through; if it is low, the response is flagged or sent for review. This guardrail assumes that confidence equals accuracy, but a model’s self-assessment is no guarantee of truth.

The moderation filter catches overtly harmful content but can overlook obfuscated or multilingual content. And the jailbreak guardrail assumes that the problem is static, even as new adversarial prompts appear daily. It also relies on one LLM to protect another LLM from jailbreaks.

In short, these guardrails detect behavior; they do not correct it. Detection without enforcement leaves systems exposed.

The Expanding Risk Landscape

When the guardrails fail, the risk extends beyond text generation errors. The AgentKit architecture enables deep integration between agents and external systems through Model Context Protocol (MCP) connectors. That integration enables automation, but it also opens new avenues of compromise, such as:

- Data leakage can occur through prompt injection or misuse of connectors tied to sensitive services such as Gmail, Dropbox, or internal file repositories.

- Credential misuse is another emerging threat: when developers manually generate OAuth tokens with broad scopes, they create a risk of “information sharing as a service,” where a single over-privileged token can expose entire systems.

- Excessive autonomy is a further risk: a single agent decides and acts across multiple tools. If compromised, it becomes a single point of failure that can read files or change data across all connected services.

- Finally, third-party connectors can introduce unvetted code paths, leaving businesses dependent on the security of a third party’s API or hosting environment.
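The credential misuse risk above can be reduced with a deterministic scope check before a token is attached to a connector. The following is a minimal sketch under stated assumptions: the connector names, scope strings, and the `ALLOWED_SCOPES` table are hypothetical, and a real deployment would take them from the connector’s own documentation and the organization’s policy.

```python
# Hypothetical scope names and policy; real connectors define their own.
ALLOWED_SCOPES = {
    "mail_connector": {"mail.read"},
    "files_connector": {"files.read"},
}


def excess_scopes(connector: str, granted: set[str]) -> set[str]:
    """Return the granted scopes that exceed the connector's allowlist."""
    # Unknown connectors have an empty allowlist, so every scope is excess.
    return granted - ALLOWED_SCOPES.get(connector, set())


def validate_token(connector: str, granted: set[str]) -> None:
    """Raise if the token carries more privilege than the policy allows."""
    extra = excess_scopes(connector, granted)
    if extra:
        raise PermissionError(
            f"{connector}: token grants unapproved scopes {sorted(extra)}"
        )
```

Calling `validate_token("mail_connector", {"mail.read", "mail.send"})` raises `PermissionError` because `mail.send` is not on the allowlist. The check defaults to deny, which keeps a newly added connector from inheriting broad access by accident.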

Why Guardrails Are Not Enough at Scale

Guardrails act as useful speed bumps, not barriers. They detect; they do not protect. Many are soft guardrails: probabilistic, model-driven systems that make best guesses rather than enforce rules. These can fail silently or inconsistently, giving teams a false sense of security. Even hard guardrails, such as pattern-based PII detection, cannot anticipate every context or encoding. Attackers, and sometimes ordinary users, can bypass them.

For enterprise security teams, the important point is that OpenAI’s defaults are tuned for general safety, not for a specific organization’s threat model or compliance requirements. A bank, hospital, or manufacturer that uses the same baseline protection as a consumer application assumes a level of homogeneity that simply doesn’t exist.

What Mature Agent Security Looks Like

True security requires a layered approach, combining soft, hard, and structural layers of oversight under a governance framework that spans the agent lifecycle.

That means:

- Strict enforcement of sensitive data access, API calls, and connector permissions.

- Isolation and monitoring so that each agent operates within defined parameters, and its activity can be observed in real time.

- Developer awareness of how to manage tokens, workflows, and RAG resources securely.

- Policy enforcement that ensures agents cannot act outside authorized contexts, regardless of how they are instructed.
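Strict enforcement, as opposed to model-based detection, can be sketched as a deterministic check that sits between the agent and its tools. The names below (`ToolCall`, `POLICY`, `is_authorized`, `execute`) and the example agents are hypothetical and are not part of AgentKit. The idea is that the decision depends only on the call itself, so no prompt can talk the check into a different answer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    agent: str
    tool: str
    resource: str


# Hypothetical policy: which tools and resource prefixes each agent may use.
POLICY = {
    "support_agent": {"search_tickets": ("tickets/",)},
    "report_agent": {"read_file": ("reports/",)},
}


def is_authorized(call: ToolCall) -> bool:
    """Deterministic check: deny unless agent, tool, and resource all match."""
    prefixes = POLICY.get(call.agent, {}).get(call.tool)
    if prefixes is None:
        return False
    # str.startswith accepts a tuple of prefixes. A real check would also
    # normalize the resource path before comparing it.
    return call.resource.startswith(prefixes)


def execute(call: ToolCall, handler):
    """Run the handler only if the policy allows the call."""
    if not is_authorized(call):
        raise PermissionError(f"denied: {call}")
    return handler(call)
```

With this policy, `report_agent` can read `reports/q3.csv` but is refused for `hr/salaries.csv` or for any tool it was not granted, whatever instructions reached the model.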

In mature environments, guardrails are one layer of a larger control plane that includes runtime authorization, testing, and sandboxing. It’s the difference between a content filter and an actual containment strategy.

Guidance for Security Leaders

AgentKit and similar frameworks will accelerate enterprise AI adoption, but security leaders should resist the temptation to rely on guardrails as comprehensive controls. The mechanisms introduced by OpenAI are important, but they are mitigations, not prevention.

CISOs and AppSec teams must:

- Treat built-in guardrails as a single layer in a broader defense-in-depth pipeline.

- Perform independent threat modeling for each agent use case, especially those that handle sensitive data.

- Enforce least-privilege access across all connectors and APIs.

- Require human-in-the-loop authorization and ensure that users understand exactly what they are authorizing.

- Monitor and log continuously for drift or abuse.
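Human-in-the-loop authorization and continuous logging can be combined in one small gate. This is a sketch under stated assumptions: the `SENSITIVE_ACTIONS` set, the `run_action` function, and the `approve` callback are hypothetical, and in practice the callback would be wired to whatever review interface the organization uses. It uses only Python’s standard `logging` module.

```python
import logging
from typing import Callable

logger = logging.getLogger("agent.audit")

# Hypothetical set of actions that require a human decision.
SENSITIVE_ACTIONS = {"send_email", "delete_file", "transfer_funds"}


def run_action(
    action: str,
    summary: str,
    perform: Callable[[], str],
    approve: Callable[[str], bool],
) -> str:
    """Run an action, asking a human first when it is sensitive."""
    if action in SENSITIVE_ACTIONS:
        # Show the reviewer a plain-language summary of what will happen.
        if not approve(f"{action}: {summary}"):
            logger.warning("denied action=%s summary=%s", action, summary)
            return "denied"
        logger.info("approved action=%s summary=%s", action, summary)
    result = perform()
    logger.info("completed action=%s", action)
    return result
```

The summary string matters as much as the gate itself: an approval prompt that does not say what will be sent, deleted, or changed only moves the risk to the reviewer.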

Agentic AI is powerful precisely because it can think, plan, and act. But that independence increases risk. As organizations begin to embed these systems into daily workflows, security cannot rely on probabilistic filters or blind trust in platform defaults. Guardrails are a seat belt, not a crash barrier. Real security comes from architecture, governance, and monitoring.