The new role of QA: From bug hunter to validator of AI behavior

Picture this: Testing the new AI-powered code review feature. You submit the same pull request twice and get two different sets of suggestions. Both seem reasonable. Both hold official affairs. But they are different. Your instinct as a QA professional screams “file a bug!” But wait—is this a bug, or is this just how AI works?

If you’ve found yourself in this situation, welcome to the new reality of software quality assurance. The QA playbook we’ve relied on for decades is at odds with the probabilistic nature of AI systems. The sad truth is this: our role isn’t disappearing, but it’s changing in ways that make the country’s bug hunts look relatively uniform.

Where Expected Versus Actual Differences

For years, QA has worked on a simple principle: define expected behavior, run tests, compare actual results with expected results. Pass or fail. Green or red. Binary effects of binary world.

AI systems have completely broken this model.

Consider a customer service chatbot. The user asks, “How do I reset my password?” On Monday, the bot responds with a numbered step-by-step list. On Tuesdays, it provides the same information in a paragraph format in a friendly tone. On Wednesday, it asks a clarifying question first. All three answers are useful. All three solve the user’s problem. None of them are bugs.

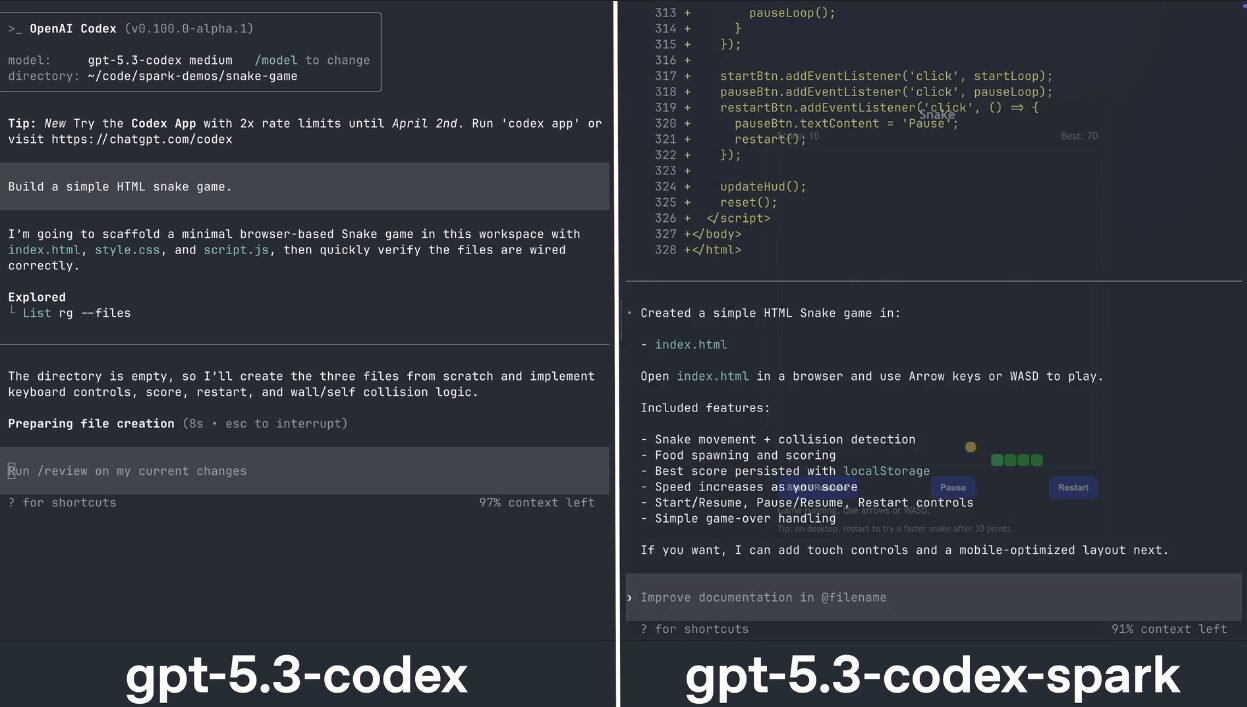

Or take an AI code completion tool. It suggests a variety of different terms, different approaches to the same problem, different levels of development depending on a context we don’t see at all. A code review AI may flag problems of a different style each time it analyzes the same code. Recommendation engines display different products for the same search query.

Traditional QA will mark every inconsistency as a defect. But in the world of AI, consistency of output isn’t the goal—consistency of quality is. That’s a very different goal, and requires a very different approach to testing.

This change has left many QA professionals experiencing a silent ownership issue. If your job has always been to find broken things, what do you do when “broken” becomes difficult?

What We Are Really Testing Now

The important question has shifted from “Does this work?” “Does this work well enough, safely enough, and efficiently enough?” That is at once very important and difficult to answer.

We no longer guarantee specific output. We enforce ethical boundaries. Does AI stay within acceptable boundaries? A customer service bot should never promise a refund that it can’t guarantee, even if the terms are different. A code suggestion tool should never recommend known security vulnerabilities, even if it says the suggestions differently each time.

We test for bias and fairness in ways that never appear in traditional testing systems. Does resuming AI tests always drop students into certain schools? Does the loan approval system treat similar applicants differently based on zip code patterns? These are not bugs in the traditional sense, the code is working as designed. But it is a quality failure that QA should catch.

Edge cases range from limiting to infinite. You can’t account for every instruction someone might give a chatbot or every situation a coding assistant might face. Risk-based assessment is no longer just smart, it’s the only viable option. We have to identify what could go wrong in the worst possible ways and focus our limited testing power there.

User trust has become a quality metric. Does AI explain its thinking? Does it accept uncertainty? Can users understand why it made a particular recommendation? These questions about transparency and user experience are now in the QA domain.

Then there’s the testing of enemies, deliberately trying to make the AI behave badly. Rapid injection attacks, jailbreak attempts, attempts to extract training data or outbound manipulation. This red team concept is something that many QA teams have never needed before. Now it’s important.

New QA Skill Stack

Here’s what QA professionals need to develop, and I won’t say much.

You need a working understanding of how AI models behave. Not the math behind neural networks, but the idea of why LLM might see things that aren’t there, why a recommender system might get stuck in a filter bubble, or why model performance degrades over time. You need to understand concepts like temperature settings, context windows, and token limits the same way you once understood API rate limits and database transactions.

Agile engineering is now an experimental skill. Being able to create inputs that investigate boundary conditions, expose biases, or trigger unexpected behavior is important. The best QA developers I know maintain problem libraries the way we used to maintain regression test suites.

Mathematical thinking must replace binary thinking. Instead of “pass” or “fail,” you examine the distribution of results. Is AI accuracy acceptable across demographic groups? Are their mistakes systematic or patterned? This requires comfort with concepts that most QA professionals don’t need since college math, if at all.

Cross-functional collaboration has become stronger. You can’t effectively test AI systems without talking to the data scientists who built them, understanding the training data, knowing the limitations of the model. QA can no longer act as quality police, we must be embedded partners who understand the technology we are certifying.

New tools are emerging, and we need to learn them. LLM output evaluation frameworks, bias detection libraries, AI behavior monitoring platforms in production. The tools ecosystem is still immature and fragmented, meaning we often have to build our own solutions or adapt tools designed for other purposes.

Opportunity in Chaos

If this all sounds overwhelming, I understand. The skills gap is real, and the industry is moving faster than most training programs can keep up with.

But here’s the thing: The primary purpose of QA hasn’t changed. We’ve always been the last line of defense between problematic software and the people who use it. There were always those who asked “but what if…” when everyone was ready to be sent. We’ve always considered counterfactual, hypothetical failure scenarios, and advocated for users who can’t advocate for themselves in planning meetings.

This power is more important now than ever. AI systems are powerful but unpredictable. They can fail in subtle ways that developers miss. They can cause damage to the scale. The role of QA is not shrinking, it is becoming more strategic, more complex, and more important.

Adaptation teams will find themselves at the center of critical discussions about what responsible AI deployment looks like. QA professionals who develop these new skills will be in high demand, because there are very few people who can bridge the gap between AI skills and quality assurance rigor.

My advice? Start small. Choose one AI feature that your team is building or using. Go beyond the happy path. Try to break it down. He tried to confuse you. Try to make it behave badly. Write down what you read. Share it with your team. Build from there.

QA evolution happens whether we are ready or not. But evolution is not extinction, it is adaptation. And the professionals who depend on this change will not just survive; they will define what quality means in the age of AI.