NVIDIA AI Brings Nemotron-3-Nano-30B to NVFP4 with Quantization Aware Distillation (QAD) for Efficient Reasoning Inference

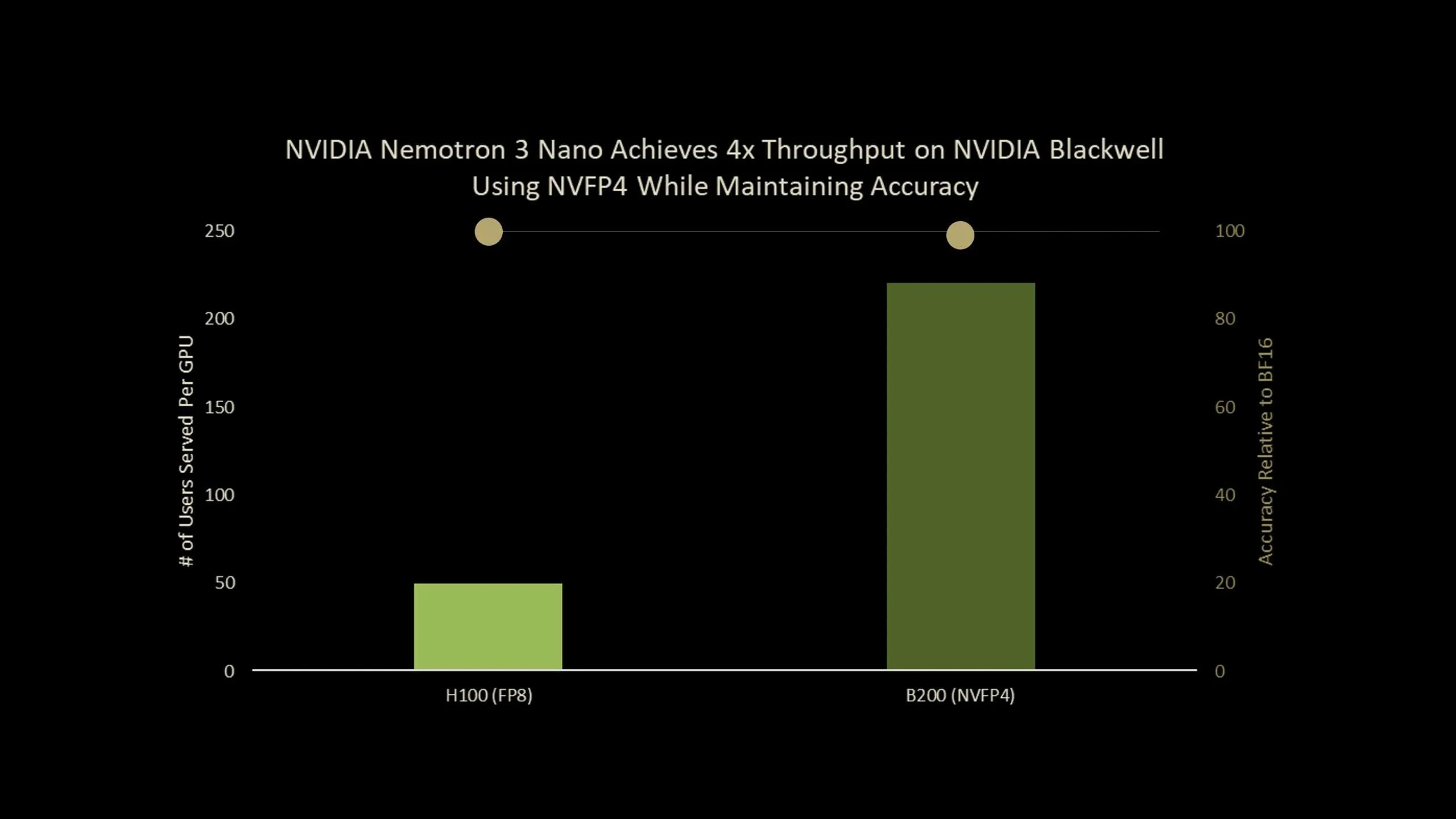

NVIDIA has released Nemotron-Nano-3-30B-A3B-NVFP4, a production checkpoint that runs a 30B parameter reasoning model in 4 bit NVFP4 format while keeping accuracy close to its BF16 baseline. The model combines a hybrid Mamba2 Transformer Mixture of Experts architecture with a Quantization Aware Distillation (QAD) recipe designed specifically for NVFP4 deployment. Overall, it is a highly efficient NVFP4 precision version of Nemotron-3-Nano that delivers up to 4x higher throughput on Blackwell B200.

What is Nemotron-Nano-3-30B-A3B-NVFP4?

Nemotron-Nano-3-30B-A3B-NVFP4 is a quantized version of Nemotron-3-Nano-30B-A3B-BF16, which the NVIDIA team trained from scratch as a unified reasoning and chat model. It is built as a hybrid Mamba2 Transformer MoE network:

- 30B total parameters

- 52 layers deep

- 23 Mamba2 layers and 23 MoE layers

- 6 grouped query attention layers with 2 groups

- Each MoE layer has 128 routed experts and 1 shared expert

- 6 experts are active per token, providing approximately 3.5B active parameters per token
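The sparse activation described in the list above can be illustrated with a minimal top-k routing sketch. The function name `route_token` and the softmax over the selected router scores are assumptions made for illustration; the source does not say how the router scores or normalizes experts.

```python
import math
from typing import List, Tuple

NUM_ROUTED_EXPERTS = 128  # routed experts per MoE layer (from the list above)
TOP_K = 6                 # experts active per token (from the list above)


def route_token(router_logits: List[float], top_k: int = TOP_K) -> List[Tuple[int, float]]:
    """Pick the top_k routed experts for one token and return (index, weight) pairs.

    Assumption: weights are a softmax over the selected logits only.
    The shared expert is not routed; it would run for every token.
    """
    # Indices of the highest scoring experts, best first.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Numerically stable softmax restricted to the selected experts.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]
```

Only the 6 selected experts plus the shared expert would do work for a given token, which is why the active parameter count stays far below the 30B total.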

The model is pre-trained on 25T tokens using a Warmup Stable Decay learning rate schedule with a batch size of 3072, a peak learning rate of 1e-3 and a minimum learning rate of 1e-5.
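A Warmup Stable Decay schedule has three phases: ramp up, hold, then decay. The sketch below uses the peak and minimum rates quoted above; the step counts, the linear warmup and the linear decay shape are assumptions for illustration, since the article does not give them.

```python
def wsd_learning_rate(step: int,
                      total_steps: int,
                      warmup_steps: int,
                      decay_steps: int,
                      peak_lr: float = 1e-3,
                      min_lr: float = 1e-5) -> float:
    """Learning rate at `step` for a warmup / stable / decay schedule.

    Assumptions: linear warmup to peak_lr, constant plateau,
    then linear decay to min_lr over the last `decay_steps` steps.
    """
    if step < warmup_steps:
        # Warmup: ramp linearly up to the peak.
        return peak_lr * (step + 1) / warmup_steps
    decay_start = total_steps - decay_steps
    if step < decay_start:
        # Stable: hold the peak rate.
        return peak_lr
    # Decay: interpolate from peak_lr down to min_lr.
    progress = min(1.0, (step - decay_start) / decay_steps)
    return peak_lr + (min_lr - peak_lr) * progress
```

For example, `wsd_learning_rate(500, 1000, 100, 200)` falls in the stable phase and returns the peak rate.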

Post training follows a 3-stage pipeline:

- Supervised fine tuning on synthetic and curated data for code, math, science, tool calling, instruction following and structured outputs.

- Reinforcement learning with synchronous GRPO across multi-step tool use, multi-turn chat and structured environments, and RLHF with a generative reward model.

- Post training quantization to NVFP4 with FP8 KV cache and a selective high precision layout, followed by QAD.

The NVFP4 checkpoint keeps the attention layers and the Mamba layers that feed into them in BF16, quantizes the remaining layers to NVFP4 and uses FP8 for the KV cache.
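The mixed precision layout can be written down as a small lookup rule. This is only a sketch: the `Layer` type, the `feeds_attention` flag and the string labels are hypothetical names introduced here, not part of any NVIDIA API or of the released checkpoint format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    kind: str                      # "attention", "mamba2" or "moe" (hypothetical labels)
    feeds_attention: bool = False  # True if the layer's output goes into an attention layer


KV_CACHE_PRECISION = "fp8"  # KV cache format, as described above


def weight_precision(layer: Layer) -> str:
    """Return the weight format for a layer under the selective layout."""
    if layer.kind == "attention":
        return "bf16"   # attention layers stay in high precision
    if layer.kind == "mamba2" and layer.feeds_attention:
        return "bf16"   # Mamba layers feeding attention also stay in BF16
    return "nvfp4"      # everything else is quantized to 4 bit
```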

The NVFP4 format and why it matters

NVFP4 is a 4 bit floating point format designed for both training and inference on the latest NVIDIA GPUs. The main features of NVFP4:

- Compared to FP8, NVFP4 delivers 2 to 3 times higher arithmetic throughput.

- It reduces memory usage by about 1.8 times for weights and activations.

- It extends MXFP4 by reducing the block size from 32 to 16 and introducing two level scaling.

The two level scaling uses E4M3-FP8 scales per block and an FP32 scale per tensor. The smaller block size lets the quantizer adapt to local statistics, and the dual scaling increases dynamic range while keeping quantization error low.
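The block structure can be made concrete with a small quantize-then-dequantize sketch in plain Python. It assumes the standard E2M1 value grid for the 4 bit elements and, as a simplification, keeps the per block scale as a Python float instead of rounding it to E4M3. The function names are hypothetical, and the bit count at the end is a back-of-envelope estimate rather than a figure taken from the source.

```python
import math
from typing import List

E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)  # magnitudes of a 4 bit E2M1 float
E2M1_MAX = 6.0
E4M3_MAX = 448.0   # largest finite E4M3 value, used to size the tensor scale
BLOCK_SIZE = 16


def fake_quant_nvfp4(values: List[float]) -> List[float]:
    """Quantize to an NVFP4-like grid and dequantize again (simulation only)."""
    if len(values) % BLOCK_SIZE != 0:
        raise ValueError("length must be a multiple of the block size")
    tensor_amax = max((abs(v) for v in values), default=0.0)
    if tensor_amax == 0.0:
        return [0.0] * len(values)
    # Level 2: one FP32 scale per tensor, chosen so block scales fit in E4M3 range.
    tensor_scale = tensor_amax / (E2M1_MAX * E4M3_MAX)
    out: List[float] = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        block_amax = max(abs(v) for v in block)
        # Level 1: one scale per 16 element block (E4M3 in the real format).
        block_scale = block_amax / (E2M1_MAX * tensor_scale)
        scale = block_scale * tensor_scale
        for v in block:
            if scale == 0.0:
                out.append(0.0)
                continue
            # Round the scaled magnitude to the nearest representable E2M1 value.
            mag = min(E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
            out.append(math.copysign(mag * scale, v))
    return out


def bits_per_value(block_size: int = BLOCK_SIZE,
                   element_bits: int = 4,
                   block_scale_bits: int = 8) -> float:
    """Average storage per element, ignoring the single per tensor scale."""
    return element_bits + block_scale_bits / block_size
```

Under these assumptions `bits_per_value()` gives 4.5 bits per element, and 8 / 4.5 is roughly 1.78, which is in line with the quoted memory saving of about 1.8 times over FP8.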

For very large LLMs, simple post training quantization (PTQ) to NVFP4 already gives decent accuracy across benchmarks. For smaller models, especially those with heavy post training pipelines, the research team notes that PTQ causes non-negligible accuracy drops, which motivates a training based recovery method.

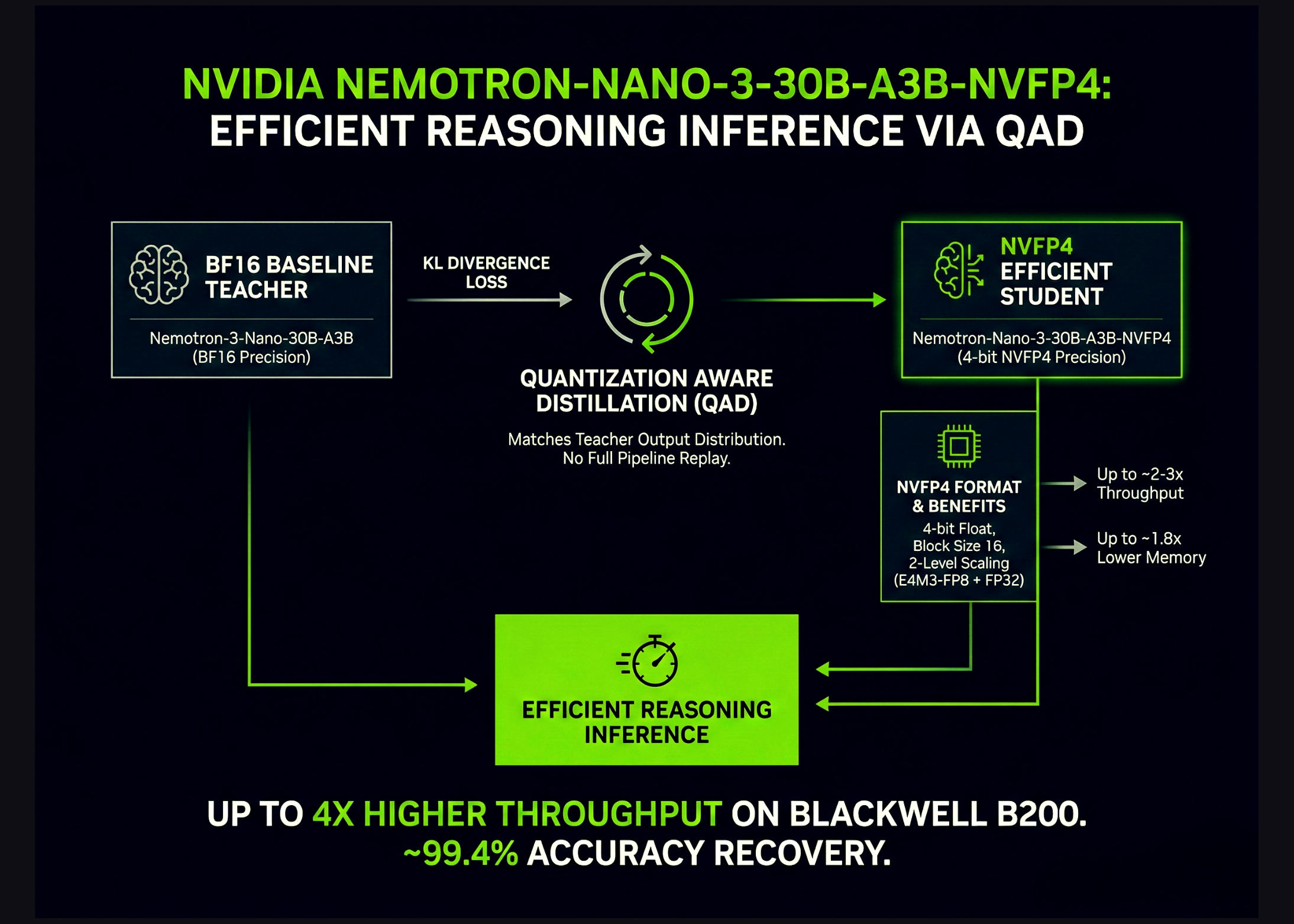

From QAT to QAD

Standard Quantization Aware Training (QAT) inserts pseudo quantization into the forward pass and reuses the original task loss, such as next token cross entropy. This works well for convolutional networks, but the research team lists 2 main issues for today’s LLMs:

- Complex multi-stage post training pipelines with SFT, RL and model merging are difficult to reproduce.

- Original training data for open models are often not available.

Quantization Aware Distillation (QAD) changes the objective instead of replaying the full pipeline. A frozen BF16 model acts as the teacher and the NVFP4 model is the student. Training minimizes the KL divergence between their output token distributions, not the original supervised or RL objective.
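The distillation objective can be sketched in a few lines of plain Python. The direction KL(teacher || student), the averaging over positions and the function names are assumptions for illustration; a real training loop would compute this on GPU tensors and backpropagate only through the student.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary row."""
    peak = max(logits)
    lse = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return [z - lse for z in logits]


def qad_kl_loss(teacher_logits: Sequence[Sequence[float]],
                student_logits: Sequence[Sequence[float]]) -> float:
    """Mean KL(teacher || student) over token positions.

    teacher_logits: rows from the frozen BF16 model.
    student_logits: rows from the NVFP4 (fake quantized) model on the same input.
    """
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        t_logp = log_softmax(t_row)
        s_logp = log_softmax(s_row)
        # sum_v p_teacher(v) * (log p_teacher(v) - log p_student(v))
        total += sum(math.exp(tl) * (tl - sl) for tl, sl in zip(t_logp, s_logp))
    return total / len(teacher_logits)
```

The loss is zero when the student reproduces the teacher's distribution exactly, and it needs only input text and teacher outputs, not labels or rewards.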

The research team highlights 3 properties of QAD:

- It aligns the quantized model with the high precision teacher more accurately than QAT.

- It remains stable even when the teacher has gone through several stages, such as supervised fine-tuning, reinforcement learning and model merging, because QAD only tries to match the teacher’s final behavior.

- It works with partial, synthetic or filtered data, because it only needs input text to query the teacher and student, not the original labels or reward models.

Benchmarks on the Nemotron-3-Nano-30B

Nemotron-3-Nano-30B-A3B is one of the RL heavy models in the QAD research. The table below shows accuracy on AA-LCR, AIME25, GPQA-D, LiveCodeBench-v5 and SciCode for BF16, NVFP4-PTQ, NVFP4-QAT and NVFP4-QAD.

Key Takeaways

- Nemotron-3-Nano-30B-A3B-NVFP4 is a 30B parameter hybrid Mamba2 Transformer MoE model that runs in 4 bit NVFP4 with an FP8 KV cache and a small set of BF16 layers preserved for stability, while keeping about 3.5B active parameters per token and supporting context windows up to 1M tokens.

- NVFP4 is a 4 bit floating point format with a block size of 16 and two level scaling, using E4M3-FP8 for each block scale and FP32 for the tensor scale, which provides about 2 to 3 times higher arithmetic throughput and about 1.8 times lower memory cost than FP8 for weights and activations.

- Quantization Aware Distillation (QAD) replaces the original task loss with KL divergence to a frozen BF16 teacher, so that the NVFP4 student can directly match the teacher’s output distribution without replaying the SFT, RL and model merging pipeline or needing the original reward models.

- Using the new Quantization Aware Distillation method, the NVFP4 version reaches 99.4% of BF16 accuracy.

- On AA-LCR, AIME25, GPQA-D, LiveCodeBench and SciCode, NVFP4-PTQ shows a noticeable loss of accuracy and NVFP4-QAT degrades further, while NVFP4-QAD recovers performance to near BF16 levels, narrowing the gap to a few points across these reasoning and coding benchmarks.
